UPDATE: ARKit 5 is here! What’s new?

The arrival of iOS 13 brings with it a new update to Apple’s SDK for Augmented Reality: ARKit 3. Months ago we wrote a post reviewing the good and bad aspects of ARKit 2, and after ARKit 3’s announcement, we were curious to see how far this new version could go.

We were able to test it with the iOS 13 developer beta for a couple of weeks, and here we share our findings.

What upgrades does it bring? Is the bad side of ARKit 2 still present in ARKit 3? Let’s see what’s new for Augmented Reality app development in iOS.

Changes from ARKit 2 to ARKit 3

People Occlusion

One of the things we considered a weakness of ARKit 2 was that it could not tell whether the camera’s view of a virtual object was blocked. No matter how realistic the virtual object looked, it remained fully visible even if there was an obstacle between it and the camera, or if someone walked in front of it. This truly broke the illusion, and was part of the ugly side of ARKit 2.

This no longer counts as an ugly side of ARKit 3. People Occlusion has entered the game, and it allows AR objects to appear in front of or behind people in a more realistic way. Give it a look:

In our test, we placed a virtual flower vase that gets hidden when you pass by or when you cover it up with your hand, as would happen with a real one. It is a sample project from Apple that you can find here.
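For readers who want to try this in their own project, here is a minimal sketch of how People Occlusion can be switched on. It is not taken from Apple’s sample; the function name `runWorldTracking(on:)` is our own, and we assume a RealityKit `ARView` is already on screen.

```swift
import ARKit
import RealityKit

/// Runs world tracking on `arView`, enabling People Occlusion when the
/// device supports it. Returns `true` if occlusion was enabled.
/// (`runWorldTracking(on:)` is a hypothetical helper name.)
@discardableResult
func runWorldTracking(on arView: ARView) -> Bool {
    let configuration = ARWorldTrackingConfiguration()
    let semantics: ARConfiguration.FrameSemantics = .personSegmentationWithDepth

    // Only newer chips support person segmentation, so check first.
    let isSupported = ARWorldTrackingConfiguration.supportsFrameSemantics(semantics)
    if isSupported {
        configuration.frameSemantics.insert(semantics)
    }

    arView.session.run(configuration)
    return isSupported
}
```

On unsupported devices the session still runs, just without occlusion, so virtual objects keep rendering on top of people as they did with ARKit 2.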

Even though this is a huge improvement over ARKit 2, for now occlusion only works for humans, thanks to ARKit’s new awareness of people, and not for objects. If the camera’s view is blocked by a sheet of paper or any other physical object (like a table tennis racket), the AR content won’t behave as realistically:

Hardware Requirements

ARKit 2 already had some hardware requirements for its features, so it’s no surprise that the same is true for ARKit 3. In fact, its latest features require devices with at least an A12 processor, whereas for ARKit 2 the minimum was the A9.

This means that, for instance, iPhone models older than the iPhone XS, or simply less powerful, are not able to leverage ARKit 3’s new capabilities.

In a nutshell, the group of supported devices is fairly small, which is a bummer in terms of potential market size for ARKit 3 based apps.
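Because support varies by device, an app can ask ARKit at runtime which of these features are available instead of hard-coding device models. The sketch below is one way to do it; the `ARKit3Capabilities` type and `currentARKit3Capabilities()` function are hypothetical names of ours, while the checks themselves are ARKit class properties and methods.

```swift
import ARKit

/// Hypothetical summary type for the ARKit 3 features discussed in this post.
struct ARKit3Capabilities {
    let peopleOcclusion: Bool
    let motionCapture: Bool
    let simultaneousCameras: Bool
}

/// Queries ARKit for feature support on the current device.
func currentARKit3Capabilities() -> ARKit3Capabilities {
    return ARKit3Capabilities(
        // People Occlusion relies on person segmentation with depth.
        peopleOcclusion: ARWorldTrackingConfiguration
            .supportsFrameSemantics(.personSegmentationWithDepth),
        // Motion Capture uses its own configuration type.
        motionCapture: ARBodyTrackingConfiguration.isSupported,
        // Face tracking while the back camera drives world tracking.
        simultaneousCameras: ARWorldTrackingConfiguration.supportsUserFaceTracking
    )
}
```

An app could use the result to hide or degrade features gracefully on older hardware rather than refusing to launch.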

RealityKit & Reality Composer

In the past, there was a steep learning curve for creating realistic AR experiences. That difficulty is somewhat reduced by the new RealityKit framework that arrives alongside ARKit 3.

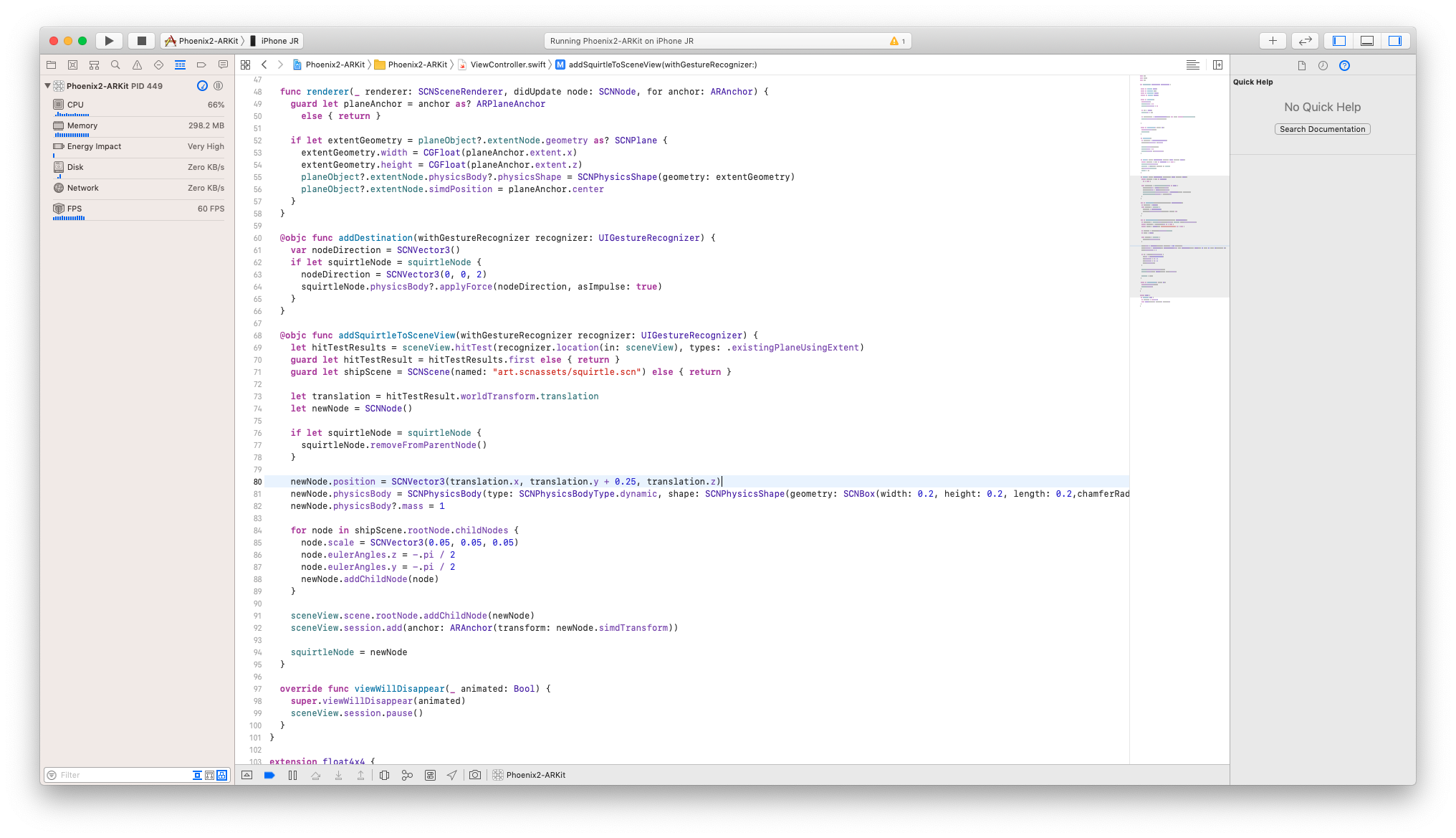

Prior to RealityKit, there was SceneKit, which allowed you to build apps with general AR interactions, such as placing virtual objects on walls or the floor. We used this framework when we tested ARKit 2 in order to work with 3D objects, and we had to dive into heavy 3D rendering concepts. Take a look at this portion of code we wrote in order to spawn a virtual Squirtle with SceneKit:
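For reference, a minimal sketch of this kind of SceneKit placement follows. It is not our original Squirtle listing: it places a plain box instead of a 3D model, and the class name `SceneKitPlacementViewController` is hypothetical. It should still give an idea of the manual steps involved (configuring the session, hit testing, converting transforms).

```swift
import UIKit
import ARKit
import SceneKit

/// Hypothetical view controller: tap a detected plane to place a box.
final class SceneKitPlacementViewController: UIViewController {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)

        let tap = UITapGestureRecognizer(target: self, action: #selector(handleTap(_:)))
        sceneView.addGestureRecognizer(tap)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // The session has to be configured and started by hand.
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal]
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    @objc private func handleTap(_ gesture: UITapGestureRecognizer) {
        let point = gesture.location(in: sceneView)
        // Find a detected plane under the touch point.
        guard let result = sceneView.hitTest(point, types: .existingPlaneUsingExtent).first else {
            return
        }

        let side: CGFloat = 0.1 // 10 cm cube
        let box = SCNBox(width: side, height: side, length: side, chamferRadius: 0)
        let node = SCNNode(geometry: box)

        // The last column of the transform holds the world position.
        let translation = result.worldTransform.columns.3
        node.position = SCNVector3(translation.x,
                                   translation.y + Float(side) / 2, // rest on the plane
                                   translation.z)
        sceneView.scene.rootNode.addChildNode(node)
    }
}
```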

Now, let’s see the same process of placing a virtual object (not a Squirtle though) but with the new RealityKit:
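As an illustration, here is a minimal RealityKit sketch that anchors a box to the first horizontal plane ARKit finds. It is written for this post rather than copied from the Xcode template; the class name `RealityKitPlacementViewController` is hypothetical, and the box stands in for whatever model you would load.

```swift
import UIKit
import RealityKit

/// Hypothetical view controller: shows a box on the first horizontal plane.
final class RealityKitPlacementViewController: UIViewController {
    private let arView = ARView(frame: .zero)

    override func viewDidLoad() {
        super.viewDidLoad()
        arView.frame = view.bounds
        arView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(arView)

        // A 10 cm box with a simple, non-metallic material.
        let box = ModelEntity(
            mesh: .generateBox(size: 0.1),
            materials: [SimpleMaterial(color: .blue, isMetallic: false)]
        )
        box.position.y = 0.05 // lift by half its height so it rests on the plane

        // RealityKit waits for a horizontal plane and attaches the anchor to it.
        let anchor = AnchorEntity(plane: .horizontal)
        anchor.addChild(box)
        arView.scene.addAnchor(anchor)
    }
}
```

There is no session configuration, hit testing, or transform math here: by default `ARView` configures its own session, and the anchor entity takes care of plane placement.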

As you can see, this process is now much easier, and can be accomplished in a few lines of code. It’s just a matter of starting a new project with RealityKit and everything comes out-of-the-box, without the need for extra code.

Furthermore, there is now Reality Composer, a utility app for both iOS and Mac that allows you to create and modify 3D models and is meant to be used with RealityKit. This app provides a huge variety of models, animations, and physics effects ready for you to work with.

Up until now we have listed three bad aspects of ARKit 2 that received an upgrade in this new version. Now let’s see which other features have been made available with ARKit 3.

ARKit 3 New Features

Motion Capture

Above we mentioned ARKit 3’s awareness of people with regard to People Occlusion, most likely made possible by a Machine Learning model, but this new capability doesn’t stop there. Awareness of people also allows you to track human movement and use it as input for an AR app.

For instance, with Motion Capture you can replicate a person’s movement in a virtual representation, like a skeleton:

Notice, however, that small movements are not tracked precisely, but in general it does pretty well for a first version.
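To give an idea of how tracked movement can be read as input, the sketch below starts a body tracking session and logs the head joint position each time ARKit updates the body anchor. The `BodyTracker` class is a hypothetical helper of ours; a real app would drive a rigged character or skeleton from the joint transforms instead of printing them.

```swift
import ARKit

/// Hypothetical helper that logs the tracked person's head position.
/// Keep a strong reference to it: `ARSession.delegate` is weak.
final class BodyTracker: NSObject, ARSessionDelegate {

    /// Starts body tracking. Returns `false` on unsupported devices.
    func start(on session: ARSession) -> Bool {
        guard ARBodyTrackingConfiguration.isSupported else { return false }
        session.delegate = self
        session.run(ARBodyTrackingConfiguration())
        return true
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let bodyAnchor as ARBodyAnchor in anchors {
            // Joint transforms are expressed relative to the body anchor.
            guard let headTransform = bodyAnchor.skeleton.modelTransform(for: .head) else {
                continue
            }
            // Combine with the anchor transform to get world coordinates.
            let headInWorld = bodyAnchor.transform * headTransform
            let position = headInWorld.columns.3
            print("Head at x: \(position.x), y: \(position.y), z: \(position.z)")
        }
    }
}
```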

Guided Plane Detection & Improved Accuracy

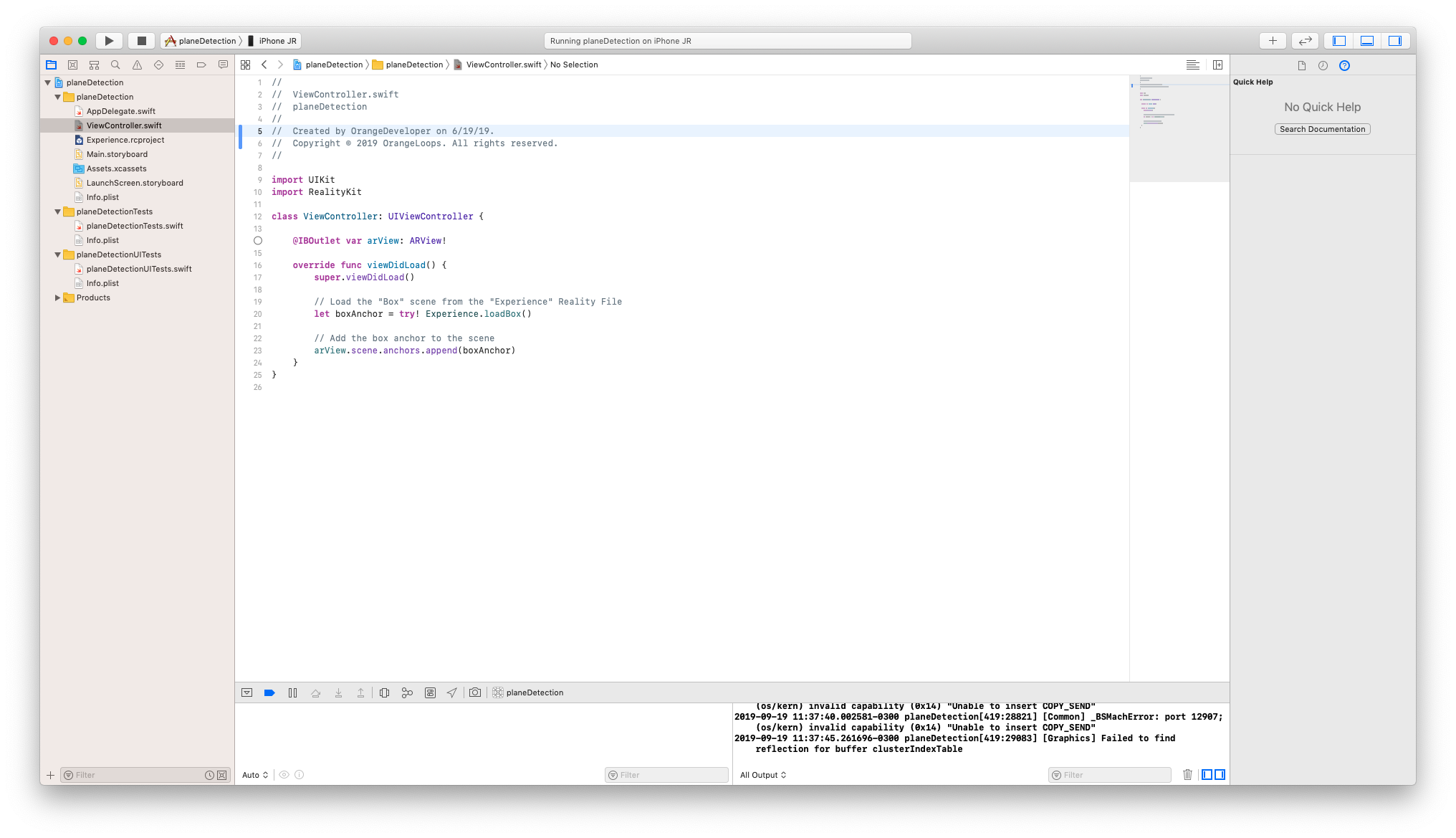

Something that was not available in ARKit 2, but would have helped a lot, is a built-in guide for recognizing planes in the real world. If you wanted to offer this kind of guidance, you had to write the code and figure it out on your own.

Now, ARKit 3 brings a simple assistant that lets you know how the plane detection is going and which one is detected, before placing any virtual object in the environment.
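A minimal sketch of adding such a guide is shown below. We assume the assistant described above corresponds to ARKit’s `ARCoachingOverlayView`; the helper name `addCoachingOverlay(to:)` is our own, and the example uses a RealityKit `ARView`.

```swift
import UIKit
import ARKit
import RealityKit

/// Adds ARKit's built-in coaching overlay on top of `arView` and returns it.
/// (`addCoachingOverlay(to:)` is a hypothetical helper name.)
@discardableResult
func addCoachingOverlay(to arView: ARView) -> ARCoachingOverlayView {
    let overlay = ARCoachingOverlayView()
    overlay.session = arView.session       // the session to observe
    overlay.goal = .horizontalPlane        // guide the user until a horizontal plane is found
    overlay.activatesAutomatically = true  // show and hide as tracking quality changes

    overlay.frame = arView.bounds
    overlay.autoresizingMask = [.flexibleWidth, .flexibleHeight]
    arView.addSubview(overlay)
    return overlay
}
```

Setting a delegate on the overlay is optional; it is mainly useful if you want to hide your own UI while coaching is active.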

Here’s a comparison between a simple ARKit 2 app we made for detecting planes, and another one from ARKit 3. Notice how there’s no visual guide in the first one and the latter is also more accurate:

It may not seem a major change, but trust me when I tell you that it saves you time and simplifies the process of recognizing planes.

Simultaneous Front and Back Camera & Collaborative Sessions

Using both front and back cameras at the same time opens a new range of possibilities for AR experiences, like interacting with virtual objects through facial expressions. It was not available before because of the power consumption such a feature would require, but it is now viable thanks to a new API Apple released after WWDC 2019.

With collaborative sessions, you can now dive into a shared AR world with other people, which is especially useful for multiplayer games, as you can see in this Minecraft demo shown at WWDC19.

Even though we were not able to test these features, we consider them to be of high interest, and they could push AR immersion even further.
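As a rough, untested sketch of what this could look like, the code below enables face tracking inside a back-camera world tracking session and reads one facial expression value. The function names are hypothetical, and using the `jawOpen` blend shape as the input is just an example.

```swift
import ARKit

/// Builds a world tracking configuration that also tracks the user's face
/// with the front camera, when the device supports it.
func makeWorldTrackingWithFaceInput() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsUserFaceTracking {
        configuration.userFaceTrackingEnabled = true
    }
    return configuration
}

/// Returns how open the user's jaw is (0 = closed, 1 = fully open) from the
/// first face anchor in `anchors`, or `nil` if no face is being tracked.
func jawOpenAmount(in anchors: [ARAnchor]) -> Float? {
    for case let face as ARFaceAnchor in anchors {
        return face.blendShapes[.jawOpen]?.floatValue
    }
    return nil
}
```

`jawOpenAmount(in:)` could be called from the session delegate’s `session(_:didUpdate:)` callback, for example to scale or animate a virtual object in the back-camera scene.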
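The sketch below outlines, under our own assumptions and without having tested it, how collaboration data might flow between devices. ARKit produces and consumes the data; the networking is left to the app, so `sendToPeers` is a hypothetical hook you would wire to something like MultipeerConnectivity, and `CollaborationRelay` is a name we made up.

```swift
import ARKit

/// Hypothetical relay between an ARSession and the app's own network layer.
/// Keep a strong reference to it: `ARSession.delegate` is weak.
final class CollaborationRelay: NSObject, ARSessionDelegate {

    /// Hypothetical transport hook: assign a closure that sends bytes to peers.
    var sendToPeers: ((Data) -> Void)?

    /// Starts world tracking with collaboration enabled.
    func start(on session: ARSession) {
        let configuration = ARWorldTrackingConfiguration()
        configuration.isCollaborationEnabled = true
        session.delegate = self
        session.run(configuration)
    }

    /// ARKit calls this periodically with data that peers need.
    func session(_ session: ARSession,
                 didOutputCollaborationData data: ARSession.CollaborationData) {
        guard let encoded = try? NSKeyedArchiver.archivedData(
            withRootObject: data, requiringSecureCoding: true) else { return }
        sendToPeers?(encoded)
    }

    /// Call this when bytes arrive from a peer.
    func receive(_ encoded: Data, into session: ARSession) {
        guard let data = try? NSKeyedUnarchiver.unarchivedObject(
            ofClass: ARSession.CollaborationData.self, from: encoded) else { return }
        session.update(with: data)
    }
}
```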

Final thoughts

We’ve listed ARKit 3’s major changes with regard to ARKit 2, but there’s more to ARKit 3 that we have not covered, such as multiple face tracking or the ability to detect up to 100 images at a time, now faster thanks to Machine Learning advances.

We could say that, after playing around with both ARKit 2 and 3, the changes and upgrades are noticeable and welcome. Although it still has room for improvement, we are glad to see that Apple keeps investing in Augmented Reality support in iOS and that new experiences can be implemented with each release.

Do you want to leverage ARKit 3’s new capabilities in your mobile apps? We would love to help!