The arrival of iOS 14 brings a set of improvements and additions to Vision, Apple’s computer vision framework. Among them are new hand and body pose detection capabilities for images and videos. We spent some time testing them, and we are sharing our findings with you.

How does body pose detection work with the Vision framework? Is it even accurate?

Let’s start with some background.

Contents

- What’s New in Vision in iOS 14?

- Understanding Hand Tracking & Body Pose Detection

- Pros & Cons

- Use Cases

- Final Thoughts

What’s New in Vision in iOS 14?

At WWDC 2020, Apple introduced several enhancements to its computer vision framework. It’s nice to see that Apple keeps investing in the field. Apart from hand & body tracking, the updated Vision framework comes with other interesting features:

➤ Trajectory Detection: Vision can analyze a video sequence and detect the trajectories of moving objects. iOS 14 introduces a new request for this, VNDetectTrajectoriesRequest. It has several applications, such as tracking the path of a ball in sports analysis.

➤ Contour Detection: VNDetectContoursRequest allows you to identify the contours of shapes in an image. It’s useful in scenarios where you need to locate specific objects or group them by form or size.

➤ Optical Flow: The new VNGenerateOpticalFlowRequest computes the directional change (a motion vector) of each pixel between two images, which is useful for motion estimation or surveillance tracking.
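To make these three requests a bit more concrete, here is a minimal Swift sketch of how each might be created and performed. It assumes frames arrive as CGImage or CVPixelBuffer values from your own capture or video-reading code; the helper function names and the parameter values (a trajectory length of 10, a contrast adjustment of 1.5, medium flow accuracy) are illustrative choices of ours, not recommendations from Apple.

```swift
import Vision
import CoreGraphics
import CoreMedia

// Illustrative sketch: helper names and parameter values are our own assumptions.

/// Trajectory detection is stateful: reuse the same request and the same
/// VNSequenceRequestHandler for every frame of the video.
func makeTrajectoryRequest() -> VNDetectTrajectoriesRequest {
    // .zero analyzes every frame; a path must span 10 points before it is reported.
    VNDetectTrajectoriesRequest(frameAnalysisSpacing: .zero, trajectoryLength: 10) { request, _ in
        guard let paths = request.results as? [VNTrajectoryObservation] else { return }
        for path in paths {
            // detectedPoints are in normalized (0...1) image coordinates.
            print(path.uuid, path.detectedPoints.map { $0.location })
        }
    }
}

func processFrame(_ frame: CVPixelBuffer,
                  with request: VNDetectTrajectoriesRequest,
                  handler: VNSequenceRequestHandler) throws {
    try handler.perform([request], on: frame)
}

/// Contour detection on a still image, looking for dark shapes on a light background.
func makeContoursRequest() -> VNDetectContoursRequest {
    let request = VNDetectContoursRequest()
    request.contrastAdjustment = 1.5   // accepted range is 0.0...3.0
    request.detectsDarkOnLight = true
    return request
}

func topLevelContourCount(in image: CGImage) throws -> Int {
    let request = makeContoursRequest()
    try VNImageRequestHandler(cgImage: image, options: [:]).perform([request])
    let observation = request.results?.first as? VNContoursObservation
    // Outermost shapes only; nested contours (e.g. holes) are their children.
    return observation?.topLevelContours.count ?? 0
}

/// Optical flow: the handler holds the current frame, the request targets the next one.
func makeOpticalFlowRequest(targeting nextFrame: CGImage) -> VNGenerateOpticalFlowRequest {
    let request = VNGenerateOpticalFlowRequest(targetedCGImage: nextFrame, options: [:])
    request.computationAccuracy = .medium   // trade some accuracy for speed
    return request
}

func opticalFlow(from frame: CGImage, to nextFrame: CGImage) throws -> CVPixelBuffer? {
    let request = makeOpticalFlowRequest(targeting: nextFrame)
    try VNImageRequestHandler(cgImage: frame, options: [:]).perform([request])
    // Each pixel of the result holds a two-component (dx, dy) motion vector.
    return (request.results?.first as? VNPixelBufferObservation)?.pixelBuffer
}
```

Keeping request construction in separate `make…` functions makes it easy to reuse the stateful trajectory request across frames and to tweak parameters in one place.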

If you’d like to learn more about everything new in iOS 14, check out Apple’s documentation.

Now, let’s dive into our topic: body tracking with Vision.

Understanding Hand Tracking & Body Pose Detection with Vision

The Vision framework lets you identify the pose of a person’s body or hands.

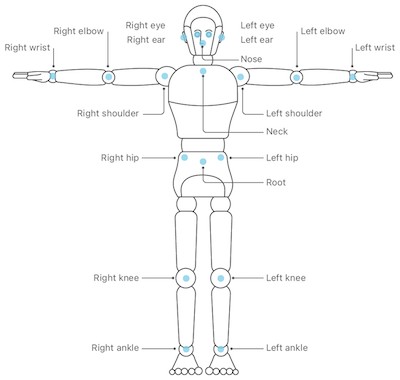

It works by locating, in a given image or video frame, a set of points that correspond to the joints of the human body.

As the figure above shows, Vision detects up to 19 body points. This provides valuable input you could leverage in your apps (we discuss some use cases below).

The process of detecting a body pose in a video frame is straightforward: create a VNDetectHumanBodyPoseRequest, perform it with a VNImageRequestHandler on the frame, and read the joint positions from the resulting VNHumanBodyPoseObservation objects.
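The three steps above could look roughly like this in Swift. This is a minimal sketch, assuming a single CGImage as input (a camera frame would go through a pixel buffer in the same way); `detectBodyPoses` is a name of our own, not part of Vision.

```swift
import Vision
import CoreGraphics

/// Hypothetical helper: detects every person in `image` and returns, per person,
/// the recognized joints keyed by joint name. Locations are normalized (0...1)
/// with the origin in the lower-left corner of the image.
func detectBodyPoses(in image: CGImage) throws
    -> [[VNHumanBodyPoseObservation.JointName: VNRecognizedPoint]] {
    // 1. Create the request.
    let request = VNDetectHumanBodyPoseRequest()
    // 2. Perform it on the image.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])
    // 3. Read one observation per detected person.
    let observations = request.results as? [VNHumanBodyPoseObservation] ?? []
    // .all asks for every joint group (face, torso, arms and legs).
    return try observations.map { try $0.recognizedPoints(.all) }
}
```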

With the position of each body point available, we drew the points over the frame and linked them with lines.
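The drawing step boils down to two things: converting Vision’s normalized coordinates (origin at the bottom-left) into view coordinates (origin at the top-left, as in UIKit), and connecting pairs of joints. The sketch below builds a CGPath for that. The `skeletonBones` list is a hypothetical, partial skeleton chosen for illustration, and the helper names are our own.

```swift
import Vision
import CoreGraphics

/// Hypothetical, partial list of joint pairs to connect with lines.
let skeletonBones: [(VNHumanBodyPoseObservation.JointName, VNHumanBodyPoseObservation.JointName)] = [
    (.leftShoulder, .leftElbow), (.leftElbow, .leftWrist),
    (.rightShoulder, .rightElbow), (.rightElbow, .rightWrist),
    (.leftHip, .leftKnee), (.leftKnee, .leftAnkle),
    (.rightHip, .rightKnee), (.rightKnee, .rightAnkle),
    (.neck, .root)
]

/// Converts a normalized Vision point (origin bottom-left) into pixel
/// coordinates with a top-left origin.
func imagePoint(_ normalized: CGPoint, width: Int, height: Int) -> CGPoint {
    let p = VNImagePointForNormalizedPoint(normalized, width, height)
    return CGPoint(x: p.x, y: CGFloat(height) - p.y)
}

/// Adds one line segment per bone whose two joints were both detected.
func skeletonPath(for joints: [VNHumanBodyPoseObservation.JointName: VNRecognizedPoint],
                  width: Int, height: Int) -> CGPath {
    let path = CGMutablePath()
    for (from, to) in skeletonBones {
        guard let a = joints[from], let b = joints[to],
              a.confidence > 0, b.confidence > 0 else { continue }
        path.move(to: imagePoint(a.location, width: width, height: height))
        path.addLine(to: imagePoint(b.location, width: width, height: height))
    }
    return path
}
```

The resulting path could then be stroked, for example by assigning it to a `CAShapeLayer` placed over the video preview.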

In our tests, it did well, providing an accurate representation of human movement and body pose. However, it struggles a bit with dark pictures or dark clothing, such as Darth Maul’s suit. The Dark Side of the Force is definitely not Vision’s cup of tea.

We also tried it with more people on screen, and the result was still decent:

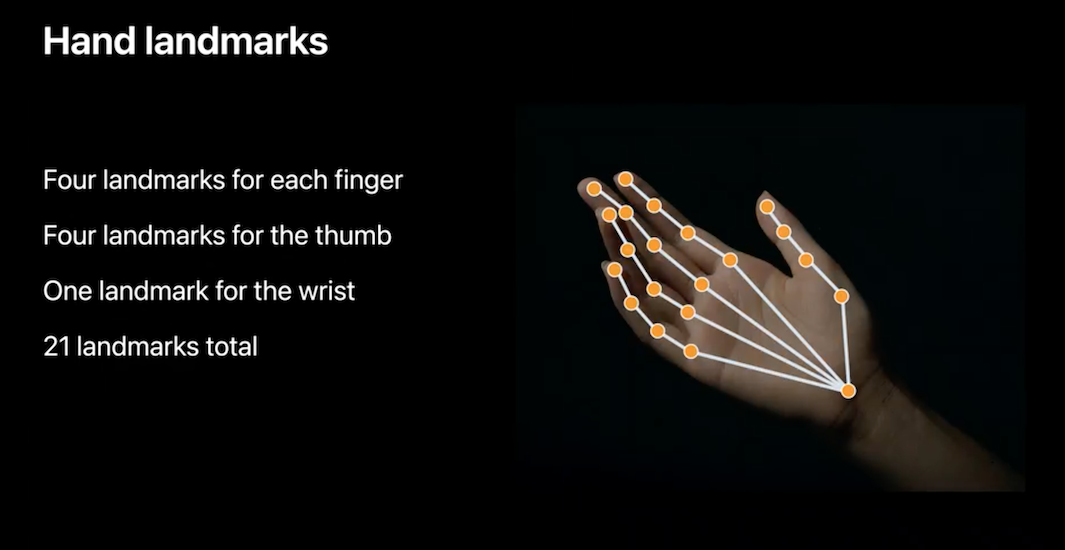

For hand pose detection, the request changes to VNDetectHumanHandPoseRequest. The procedure is almost the same as for body tracking, except that instead of full-body joints, Vision looks for the 21 joints of the hand.
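Here is a hedged sketch of the hand version, in the same style as a body pose request: it reads the five fingertips of each detected hand. `maximumHandCount` (two by default) caps how many hands Vision reports; `makeHandPoseRequest` and `fingertips` are hypothetical helper names.

```swift
import Vision
import CoreGraphics

/// Hypothetical factory so the request configuration lives in one place.
func makeHandPoseRequest(maxHands: Int = 2) -> VNDetectHumanHandPoseRequest {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = maxHands
    return request
}

/// Returns the fingertip locations (normalized coordinates) for each detected hand.
func fingertips(in image: CGImage) throws -> [[CGPoint]] {
    let request = makeHandPoseRequest()
    try VNImageRequestHandler(cgImage: image, options: [:]).perform([request])
    let hands = request.results as? [VNHumanHandPoseObservation] ?? []
    let tips: [VNHumanHandPoseObservation.JointName] =
        [.thumbTip, .indexTip, .middleTip, .ringTip, .littleTip]
    return try hands.map { hand in
        let points = try hand.recognizedPoints(.all)
        // compactMap skips any fingertip Vision did not return.
        return tips.compactMap { points[$0]?.location }
    }
}
```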

Again, in our tests the overall performance was quite impressive.

Not everything in the garden is rosy, though. As we mentioned above, there are some scenarios in which body tracking is not as accurate. We’ll get to that in the following section.

Pros & Cons

The Good of Body Tracking with Vision 👍

➤ The APIs are easy to use and implement in your project. A programmer with basic AVFoundation knowledge should be able to get this feature working in a few lines of code.

➤ If the body or hand is fully visible, whether there are one or several subjects on screen, pose detection works well and is highly accurate.
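To give an idea of what “a few lines of code” means, here is a hedged sketch of a video data output delegate that runs the body pose request on every camera frame. Session configuration, camera permissions and dispatching results to the UI are left out; the `.up` orientation is an assumption that depends on device and camera, and `PoseFrameProcessor` and `attach` are hypothetical names.

```swift
import AVFoundation
import Vision

final class PoseFrameProcessor: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
    // One request, reused for every frame.
    private let request = VNDetectHumanBodyPoseRequest()
    // Called with the poses found in each frame, on the capture queue.
    var onPoses: (([VNHumanBodyPoseObservation]) -> Void)?

    func captureOutput(_ output: AVCaptureOutput,
                       didOutput sampleBuffer: CMSampleBuffer,
                       from connection: AVCaptureConnection) {
        guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }
        // .up is an assumption; pass the orientation that matches your setup.
        let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, orientation: .up, options: [:])
        do {
            try handler.perform([request])
            onPoses?(request.results as? [VNHumanBodyPoseObservation] ?? [])
        } catch {
            print("Body pose request failed: \(error)")
        }
    }
}

/// Hypothetical wiring: deliver frames to the processor on a background queue.
func attach(_ processor: PoseFrameProcessor, to session: AVCaptureSession) {
    let output = AVCaptureVideoDataOutput()
    output.alwaysDiscardsLateVideoFrames = true   // drop frames rather than queue them up
    output.setSampleBufferDelegate(processor, queue: DispatchQueue(label: "pose.frames"))
    if session.canAddOutput(output) {
        session.addOutput(output)
    }
}
```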

The Bad of Body Tracking with Vision 👎

➤ When the subject is turned sideways and part of the body is hidden, Vision still tries to guess the positions of the hidden joints. The result is not very realistic.

➤ In poor lighting or dark environments, accuracy drops considerably.
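Vision attaches a confidence score to every recognized point, so one possible mitigation is to ignore low-confidence points instead of drawing or analyzing guessed positions. This is a minimal sketch; the 0.3 threshold and the helper names are assumptions, and whether a given threshold filters out the unrealistic guesses is something to verify on your own footage.

```swift
import Vision

/// Keeps only joints whose confidence reaches the threshold.
/// The 0.3 default is an illustrative value, not an Apple recommendation.
func confidentJoints(
    _ joints: [VNHumanBodyPoseObservation.JointName: VNRecognizedPoint],
    minimumConfidence: VNConfidence = 0.3
) -> [VNHumanBodyPoseObservation.JointName: VNRecognizedPoint] {
    joints.filter { $0.value.confidence >= minimumConfidence }
}

/// Hypothetical check before running any analysis: true only when every
/// required joint was detected with enough confidence.
func hasReliableJoints(
    _ joints: [VNHumanBodyPoseObservation.JointName: VNRecognizedPoint],
    required: [VNHumanBodyPoseObservation.JointName],
    minimumConfidence: VNConfidence = 0.3
) -> Bool {
    let reliable = confidentJoints(joints, minimumConfidence: minimumConfidence)
    return required.allSatisfy { reliable[$0] != nil }
}
```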

Having said all of the above, we consider the overall performance to be very impressive. We see exciting use cases for this technology, especially when combined with AR. Let’s look at some of them.

Vision & Body Pose Detection Use Cases

Body Tracking in Retail

Probably one of the first things that comes to mind when thinking about body tracking applications in retail is the well-known “Magic Mirror”. These have been around for some years now, leveraging augmented reality capabilities. The concept of virtual try-on is by no means new, but it could benefit from body pose detection through more accurate and realistic results.

For instance, thanks to Vision’s hand tracking, apps would be able to provide a better virtual try-on experience for hand jewelry or watches. In most cases, AR apps with that feature rely on paper wristbands or QR codes to spawn the virtual watch or ring. With hand pose detection, not only would you not need them, but the overall experience would also be more precise.
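As an illustration of how hand pose could replace the paper wristband, here is a sketch that positions a virtual watch from two hand joints: the wrist and the knuckle of the middle finger (`.middleMCP`). `WatchPlacement`, the 0.3 confidence threshold and the angle convention are hypothetical choices; rendering the watch itself (for example with ARKit or SceneKit) is out of scope.

```swift
import Vision
import CoreGraphics
import Foundation

/// Hypothetical placement for a virtual watch: centered on the wrist and
/// rotated to follow the wrist-to-middle-knuckle direction.
struct WatchPlacement {
    let center: CGPoint   // pixel coordinates
    let angle: Double     // radians, measured from the +x axis
}

/// Pure geometry, kept separate so it can be tested without Vision.
/// Use pixel coordinates: normalized ones distort the angle on non-square images.
func watchPlacement(wrist: CGPoint, middleKnuckle: CGPoint) -> WatchPlacement {
    let dx = Double(middleKnuckle.x - wrist.x)
    let dy = Double(middleKnuckle.y - wrist.y)
    return WatchPlacement(center: wrist, angle: atan2(dy, dx))
}

/// Returns nil when either joint is too uncertain (the threshold is illustrative).
func watchPlacement(for hand: VNHumanHandPoseObservation,
                    imageWidth: Int, imageHeight: Int,
                    minimumConfidence: VNConfidence = 0.3) throws -> WatchPlacement? {
    let wrist = try hand.recognizedPoint(.wrist)
    let knuckle = try hand.recognizedPoint(.middleMCP)
    guard wrist.confidence >= minimumConfidence,
          knuckle.confidence >= minimumConfidence else { return nil }
    return watchPlacement(
        wrist: VNImagePointForNormalizedPoint(wrist.location, imageWidth, imageHeight),
        middleKnuckle: VNImagePointForNormalizedPoint(knuckle.location, imageWidth, imageHeight))
}
```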

Surveillance and security could benefit from body pose detection as well. By analyzing people’s behavior and movement in real time through security cameras, combined with machine learning models, shoplifters could be spotted more easily.

There are real examples of this application, such as Japan’s AI Guardman. However, such systems have had to depend on custom body tracking technologies, which are not always the most accurate solutions. Now, Apple offers its own framework for developers to work with, and has added new capabilities every year since its launch.

Sports/Workout Analysis

With Vision’s new features and the capabilities of CoreML, there’s a wide range of opportunities for sports analysis. Players would be able to understand their performance better and receive feedback on it.

For instance, Vision and CoreML make it possible to analyze shots in sports such as football or basketball. Players can see the trajectory, speed, and angle of their throws in real time, which makes practicing and improving easier. Apple has released a sample app that leverages these capabilities to showcase how sports analysis could work.

When it comes to working out, Vision is useful too. With body pose detection, fitness apps would be able to detect human movements, correct exercise form, and provide more complex and detailed feedback on the training session.

Are you doing your push-ups the right way? Is your yoga pose correct? Now it’s easier for your virtual coach to tell you.
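A virtual coach could check a push-up by measuring the elbow angle formed by the shoulder, elbow and wrist joints. The sketch below computes that angle; the 90° threshold and the function names are hypothetical, and the points should be in pixel coordinates (for example via `VNImagePointForNormalizedPoint`) so the image’s aspect ratio does not distort the angle.

```swift
import CoreGraphics
import Foundation

/// Angle in degrees (0...180) at joint `b` between the segments b→a and b→c.
/// For shoulder, elbow and wrist, this is the elbow angle.
func jointAngle(_ a: CGPoint, _ b: CGPoint, _ c: CGPoint) -> Double {
    let v1x = Double(a.x - b.x), v1y = Double(a.y - b.y)
    let v2x = Double(c.x - b.x), v2y = Double(c.y - b.y)
    let dot = v1x * v2x + v1y * v2y
    let cross = v1x * v2y - v1y * v2x
    // atan2(|cross|, dot) avoids the precision issues of acos near 0° and 180°.
    return atan2(abs(cross), dot) * 180 / Double.pi
}

/// Hypothetical rule: the push-up is in its "down" phase once the elbow bends below the threshold.
func isPushUpDown(shoulder: CGPoint, elbow: CGPoint, wrist: CGPoint,
                  threshold: Double = 90) -> Bool {
    jointAngle(shoulder, elbow, wrist) < threshold
}
```

Tracking the switch between the “down” and “up” phases across consecutive frames would give a simple repetition counter.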

Games & Entertainment

One of the fields that could leverage body pose detection the most is the entertainment/gaming industry. AR experiences could become even more immersive by letting your body interact with virtual elements.

Remember Duck Hunt on the NES? (Wow, the nostalgia.) Well, what about an AR mobile game that uses Vision to detect your hand, letting you use it as the gun?
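Here is one way such a “finger gun” could be read from a hand pose observation. The gesture rule (aim with the index fingertip, fire when the thumb tip comes close to the index knuckle) and the 0.05 distance threshold are our own hypothetical design, not something Vision provides.

```swift
import Vision
import CoreGraphics

/// Hypothetical finger-gun input state.
struct FingerGunState {
    let aim: CGPoint      // index fingertip location, normalized coordinates
    let isFiring: Bool
}

/// Pure gesture rule: fire when the thumb tip is within `fireDistance`
/// (normalized units, illustrative value) of the index knuckle.
func fingerGunState(indexTip: CGPoint, indexKnuckle: CGPoint, thumbTip: CGPoint,
                    fireDistance: CGFloat = 0.05) -> FingerGunState {
    let dx = thumbTip.x - indexKnuckle.x
    let dy = thumbTip.y - indexKnuckle.y
    // Compare squared distances to avoid a square root.
    let firing = dx * dx + dy * dy < fireDistance * fireDistance
    return FingerGunState(aim: indexTip, isFiring: firing)
}

/// Reads the three joints the rule needs; returns nil if any of them is missing.
func fingerGunState(for hand: VNHumanHandPoseObservation) throws -> FingerGunState? {
    let points = try hand.recognizedPoints(.all)
    guard let tip = points[.indexTip],
          let knuckle = points[.indexMCP],
          let thumb = points[.thumbTip] else { return nil }
    return fingerGunState(indexTip: tip.location, indexKnuckle: knuckle.location,
                          thumbTip: thumb.location)
}
```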

Or imagine being able to pet your Pikachu in Pokémon GO, creating a more realistic experience with the creatures. The possibilities are many.

Games that use your body as an input, such as the popular dancing games, would also benefit from this technology. I guess we will soon see tablets and mobile devices delivering gaming experiences similar to the Xbox Kinect. All of this could be possible by combining the strengths of Vision, CoreML, and ARKit.

Final Thoughts

Using the human body as an input has become a matter of interest for developers and tech companies, and it seems that Apple keeps investing in it. See, for instance, ARKit’s Motion Capture, introduced a year ago, or the recently announced Face Tracking. With the improvements and additions to computer vision frameworks such as Vision, we have more tools on the table to leverage these capabilities in mobile apps.

As with every emerging technology, hand & body pose detection with Vision has its flaws. It doesn’t work perfectly in dark environments or when you are wearing gloves. However, the results in normal conditions, as shown above, left us with a very good impression of what Vision can do.

Are you looking to implement body tracking in your mobile app or software solution? Drop us a line!