We recently released our support chatbot in Kezmo (our own @kezmobot), implemented with DialogFlow (formerly API.AI). This blog post details some of the lessons we learned building it.

Kezmo is a task management & messaging platform for organizations that require agile internal and external communication. The product includes a support space where users can chat with our team. Until recently, support was handled by a subset of the dev team. While this has been a great way to get insight into our product’s usage, the Pareto principle holds: 80% of the users tend to ask about the same things, hitting 20% of our knowledge base. Also, answering customer questions during weekend nights is not fun after a while.

So we set out to build our own customer support chatbot.

Why API.AI?

If you are about to build a chatbot you’ll learn there are a number of alternatives out there provided by the major players in technology, and then some more. Among them: API.AI (recently acquired by Google), Microsoft Bot Framework, and IBM Watson.

API.AI was recommended to us by our good friends at the Tryolabs team. When evaluating alternatives such as the Microsoft Bot Framework we realized that API.AI requires less code plumbing and, overall, proposes a simpler approach (higher limits on the number of intents, no need for handlers on intent callbacks, no multiple cognitive services and endpoints). Plus API.AI is free, right? After exchanging emails with Google’s API.AI sales team, I learned that:

Our platform is free to use as provided but we do restrict requests per minute to 180 on average. Google Cloud will add paid tiers later in 2017 with new features that align with enterprise requirements and allow for increased request rates.

They said the free version isn’t going away. We’ll see about that. So, for now, free means you can make up to 180 queries to API.AI per minute on average, which works out to about three per second. For the time being this works for us.

Getting started with API.AI

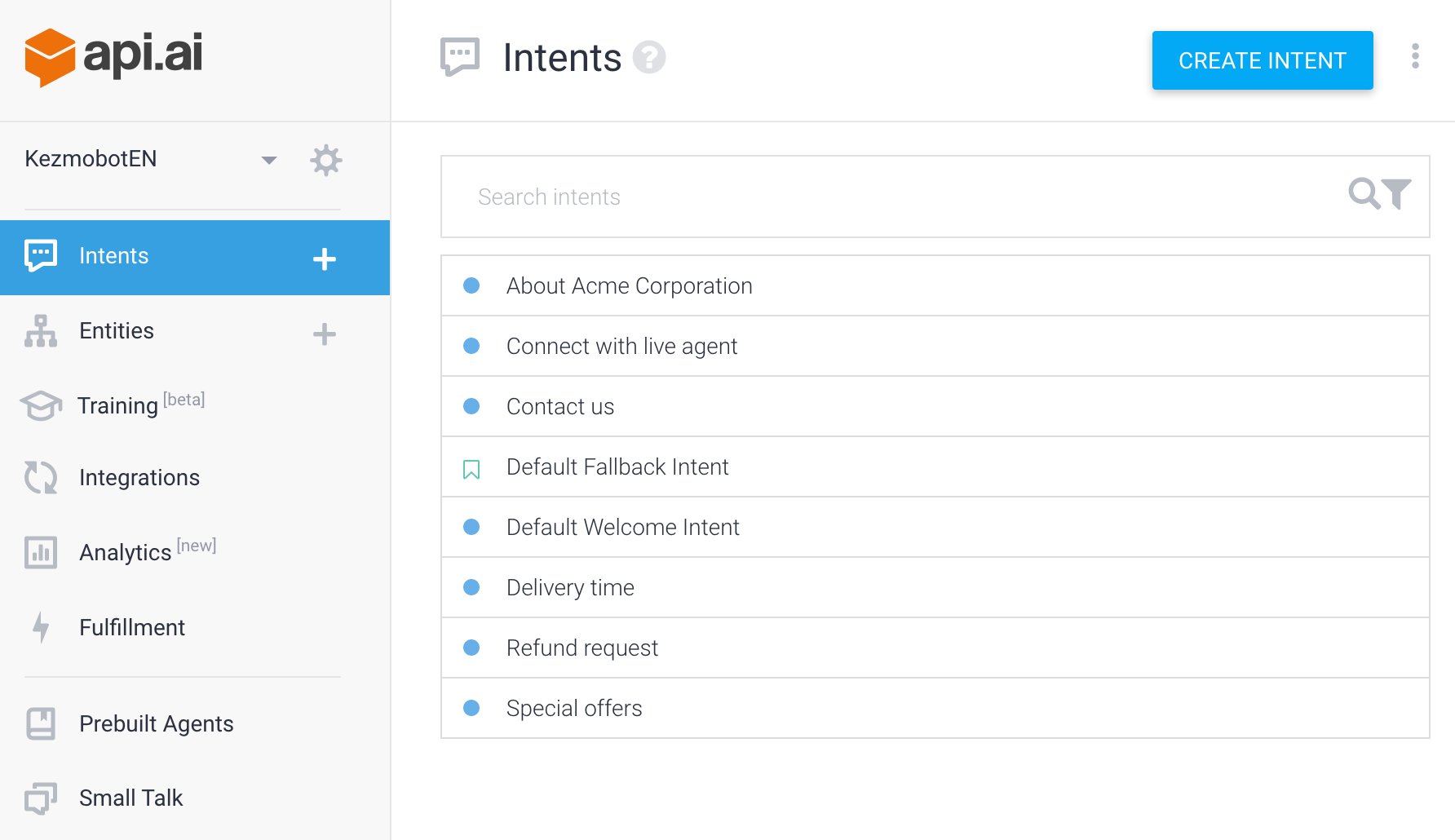

In API.AI chatbots are defined as agents. You define an agent based on intents, which are the conversation topics it can handle. For each intent you define a number of sample phrases used to train the model, so that whenever a similar phrase is found that intent is matched. In our experience, you should plan on loading around 20 sample sentences for each intent for the model to behave reasonably well.

You connect to your agent using any of the supported out-of-the-box channels, such as Facebook Messenger, or Skype, or you can code your own client using any of the supported libraries to connect with API.AI. Since we wanted our bot to run on Kezmo, our own messaging platform, we had to program the integration.

When you create an agent you can base it on existing sample data, which preloads the agent with typical intents, or you can import prebuilt agents, which also preload intents into the new agent.

We wanted our bot to handle basic small talk, so we imported the small talk prebuilt agent, which loaded 84 intents. The imported intents do not include preloaded answers, so expect to spend some hours loading content in order to enable casual conversation skills.

Then we moved on to load our FAQ knowledge base as intent responses. In order to do this we combed through all our support conversations from the past six months to identify intent patterns and mine typical phrases used to express intents. This took days. We ended up with more than 500 sentences expressing intents.

Output formatting of the chatbot responses in API.AI

For a first version we loaded the answers directly into API.AI. I know we could have implemented an action and a fulfillment to retrieve them from some endpoint, but not for version one (make it work, make it right, make it scale).

The first issue we faced was supporting line breaks in the response texts loaded in API.AI. For some reason they get lost in the client call, so we had to include markup (\n) in the answers and add some basic post-processing code to the API.AI calls that replaces \n occurrences with actual line breaks in our messaging platform.
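As an illustration, a minimal sketch of that kind of post-processing could look like this in Python. The function name and the sample reply are made up for the example; the only assumption is that the response text arrives with the literal two-character markup (a backslash followed by n) wherever a line break is wanted.

```python
def restore_line_breaks(text: str) -> str:
    # Swap the literal backslash-n markup for a real newline character.
    return text.replace("\\n", "\n")


# Hypothetical response text as it might come back from the agent.
reply = "Open the task panel.\\nThen tap the + button."
print(restore_line_breaks(reply))
```

Text without the markup passes through unchanged, so the function can be applied to every response.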

Implementing the bot human handoff with API.AI

Kezmobot is a customer support agent meant to act as first level support, so we need it to be able to escalate questions in a number of scenarios, including when the user explicitly requests to talk to a real person. Our support channel is a group chat, which means it’s key that the bot remains quiet once the human agent takes over the conversation, otherwise you get the nightmare scenario where the chatbot answers both the client and the human support agent.

Transferring chat to a human agent is not something API.AI supports out of the box. You need to add some client code in order to implement this sort of behavior.

In our case we relied on a hand-off context: whenever it is returned as output of an API.AI call, the client code simply puts the bot to sleep for the current client conversation. We started with 10 minutes, but it quickly became clear it needed to be more like 12 hours.

Since we wanted to achieve a natural-looking transition, we created an intent that would match phrases such as: @kezmobot I’ll take over from here. This intent outputs the QUIET-MODE context, notifying the client code to stop passing calls for that conversation, and that’s how kezmobot goes silent.
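The client-side part of this hand-off can be sketched roughly as follows. This is an illustration under assumptions, not a definitive implementation: the class, its method names, and the idea of passing in the list of output context names are all hypothetical, and the context name is compared case-insensitively in case the service normalizes it.

```python
import time

QUIET_CONTEXT = "quiet-mode"    # assumed spelling of the QUIET-MODE context
QUIET_SECONDS = 12 * 60 * 60    # 12 hours


class QuietModeTracker:
    """Remembers which conversations the bot must stay silent in."""

    def __init__(self, quiet_seconds=QUIET_SECONDS, clock=time.time):
        self._quiet_seconds = quiet_seconds
        self._clock = clock
        self._quiet_until = {}  # conversation id -> timestamp when silence ends

    def note_contexts(self, conversation_id, context_names):
        # Call with the output context names of each agent response.
        if any(name.lower() == QUIET_CONTEXT for name in context_names):
            self._quiet_until[conversation_id] = self._clock() + self._quiet_seconds

    def is_quiet(self, conversation_id):
        # Check before forwarding a new message to the agent.
        until = self._quiet_until.get(conversation_id)
        if until is None:
            return False
        if self._clock() >= until:
            del self._quiet_until[conversation_id]
            return False
        return True
```

The client would call `is_quiet` before sending each incoming message to the agent and skip the call while it returns `True`. Keeping the state per conversation means a hand-off in one support chat does not silence the bot in the others.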

Handling sentences with multiple intents with API.AI

After combing our support log we realized humans have a tendency to pack multiple intents in one sentence. Interactions like the following are common:

Hello, how are you doing? I wanted to ask about X feature, how does it work? By the way I love your product, thanks!

Here we have at least three intents:

- Opening Greeting

- Feature question

- Closing thank you

These types of sentences can lead to a mismatch of intents. The model may match any one of the three intents, leaving the other two unanswered. What we did was implement some very basic preprocessing of the text we send to API.AI: it recognizes stop tokens and uses them to split the sentence into multiple calls. For instance, we would split the sentence on occurrences of characters such as . ! ? which would output something like the following:

- Hi

- You can use feature X in this or that way.

- Glad you like our product! (would be great if you could post a good review on the store!)

That’s half of the solution. The other half is adding in our welcome intent wording to let the user know our bot works best with short simple phrases 😉
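The splitting step described above can be sketched like this. It is a simplified illustration: the function name is hypothetical, and the regular expression is just one reasonable way to split on the stop tokens . ! and ? when they are followed by whitespace.

```python
import re
from typing import List

# Split on whitespace that follows a stop token (. ! or ?).
# The stop token itself stays attached to the preceding fragment.
_AFTER_STOP_TOKEN = re.compile(r"(?<=[.!?])\s+")


def split_into_queries(message: str) -> List[str]:
    # Return one fragment per sentence, dropping empty fragments.
    parts = _AFTER_STOP_TOKEN.split(message.strip())
    return [part for part in parts if part]


message = (
    "Hello, how are you doing? I wanted to ask about X feature, "
    "how does it work? By the way I love your product, thanks!"
)
for fragment in split_into_queries(message):
    print(fragment)  # each fragment would be sent to the agent as its own call
```

A rule this simple also splits after abbreviations such as "e.g." when a space follows, which is acceptable for a first version but worth keeping in mind.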

Measuring your chatbot accuracy

We loaded a CSV file with two columns. The left column holds a sample text from our support logs, and the right column holds the expected intent for that phrase. Then we ran a process that would call API.AI with each line in the CSV file and check the output against the expected intent. The share of lines where the actual intent matched the expected one gave us the accuracy percentage.

One caveat is that you have to sleep your calls to API.AI to avoid going beyond the allowed throughput for the free service.
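A rough sketch of such an evaluation loop is shown below. The call to the agent is deliberately left as a pluggable `detect_intent` function, since the actual client call depends on the library you use; that function, the helper names, and the sample intent names are all hypothetical. The 0.4 second pause is an assumption chosen to stay under 180 requests per minute.

```python
import csv
import io
import time


def load_rows(csv_file):
    # Read (sample text, expected intent) pairs from a two-column CSV file.
    return [(row[0], row[1]) for row in csv.reader(csv_file) if len(row) >= 2]


def measure_accuracy(rows, detect_intent, delay_seconds=0.4, sleep=time.sleep):
    # Return the fraction of rows whose detected intent equals the expected one.
    total = 0
    correct = 0
    for text, expected in rows:
        if total:
            sleep(delay_seconds)  # pause between calls to respect the rate limit
        if detect_intent(text) == expected:
            correct += 1
        total += 1
    return correct / total if total else 0.0


# Demo with a stub classifier standing in for the real agent call.
sample_csv = io.StringIO("hi there,greeting\nhow do I add a task,feature.tasks\n")
stub_answers = {"hi there": "greeting", "how do I add a task": "smalltalk"}
accuracy = measure_accuracy(load_rows(sample_csv), stub_answers.get, delay_seconds=0.0)
print(f"accuracy: {accuracy:.0%}")
```

Passing the classifier in as a function also makes it easy to rerun the same CSV after each change to the agent settings and compare the numbers.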

Between executions we tuned the ML Settings for the agent. Basically we played with different numbers for the ML Classification Threshold. We settled (for now) on a 0.5 value, which worked best for us to avoid false positives.

Implementing a chatbot that can handle multiple languages with API.AI

A big part of Kezmo’s user base is from Spanish-speaking countries, so it was key for us to support multiple languages.

The way to implement this is to use multiple agents, one trained for each language.

Currently we have released the bot for Spanish, and we are working on tuning the English version of it.

The good thing is that with our approach the client code does not care about the spoken language, or even the intents at play. We rely only on existing contexts, that must be shared among languages. What sets them up is transparent for the client code.
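One way to picture the multi-agent setup is a small lookup that picks the agent for a conversation. Everything in this sketch is an assumption made for illustration: the token placeholders, the function name, the use of a locale code to choose the language, and the fallback to Spanish.

```python
# Placeholder credentials, one per language-specific agent (hypothetical values).
AGENT_TOKENS = {
    "es": "SPANISH_AGENT_TOKEN",
    "en": "ENGLISH_AGENT_TOKEN",
}
DEFAULT_LANGUAGE = "es"


def agent_token_for(locale: str) -> str:
    # Reduce a locale such as "es-UY" or "en_US" to its primary language code.
    primary = locale.replace("_", "-").split("-")[0].lower()
    # Fall back to the default agent for languages without a trained agent.
    return AGENT_TOKENS.get(primary, AGENT_TOKENS[DEFAULT_LANGUAGE])


print(agent_token_for("es-UY"))
print(agent_token_for("en_US"))
```

Once the agent is chosen, the rest of the client logic stays identical for every language, because it only looks at the contexts that come back.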

Chatbot analytics on API.AI

For the time being API.AI analytics are pretty basic. It’s rumored that they will open Chatbase to the masses at some point. In the meantime, log your own stats in your own database.
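As a starting point, logging your own stats can be as simple as one table with a row per query. The sketch below uses Python's built-in sqlite3 module. The table layout, column names, and function names are assumptions made for the example, and a missing intent (`None`) stands for a query the bot could not match.

```python
import sqlite3
import time


def open_stats_db(path=":memory:"):
    # Open (or create) the stats database and make sure the table exists.
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS bot_queries (
               asked_at REAL NOT NULL,
               conversation_id TEXT NOT NULL,
               user_text TEXT NOT NULL,
               intent TEXT,
               score REAL
           )"""
    )
    return conn


def log_query(conn, conversation_id, user_text, intent, score):
    # Store one row per message sent to the agent.
    conn.execute(
        "INSERT INTO bot_queries VALUES (?, ?, ?, ?, ?)",
        (time.time(), conversation_id, user_text, intent, score),
    )
    conn.commit()


def intent_counts(conn):
    # Return (intent, count) pairs, most frequent first.
    return conn.execute(
        "SELECT intent, COUNT(*) FROM bot_queries "
        "GROUP BY intent ORDER BY COUNT(*) DESC, intent"
    ).fetchall()
```

Even a simple count per intent shows which questions come up most often and how many messages end up unmatched.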

Closing Thoughts

API.AI is adding features on a monthly basis. The business model is still not rock solid. But it’s simple, and it gets the job done. It provides a good balance between expressive power and simplicity of the whole developer experience.

Real-world usage of our chatbot is not like the human-to-human interactions in our support logs, so we are now working on a second round of tuning based on the real conversations people are having with our bot.

If you want us to check your support process to see if we can implement a chatbot for it, drop us a line. We’d be happy to help you!