Artificial intelligence has become a staple in our daily workflows as designers. At OrangeLoops, tools like ChatGPT, Copilot, Gemini, and Figma AI already influence how we brainstorm, prototype, and collaborate. But lately, a new wave of AI-driven platforms has caught our attention: Prompt-to-UI tools.

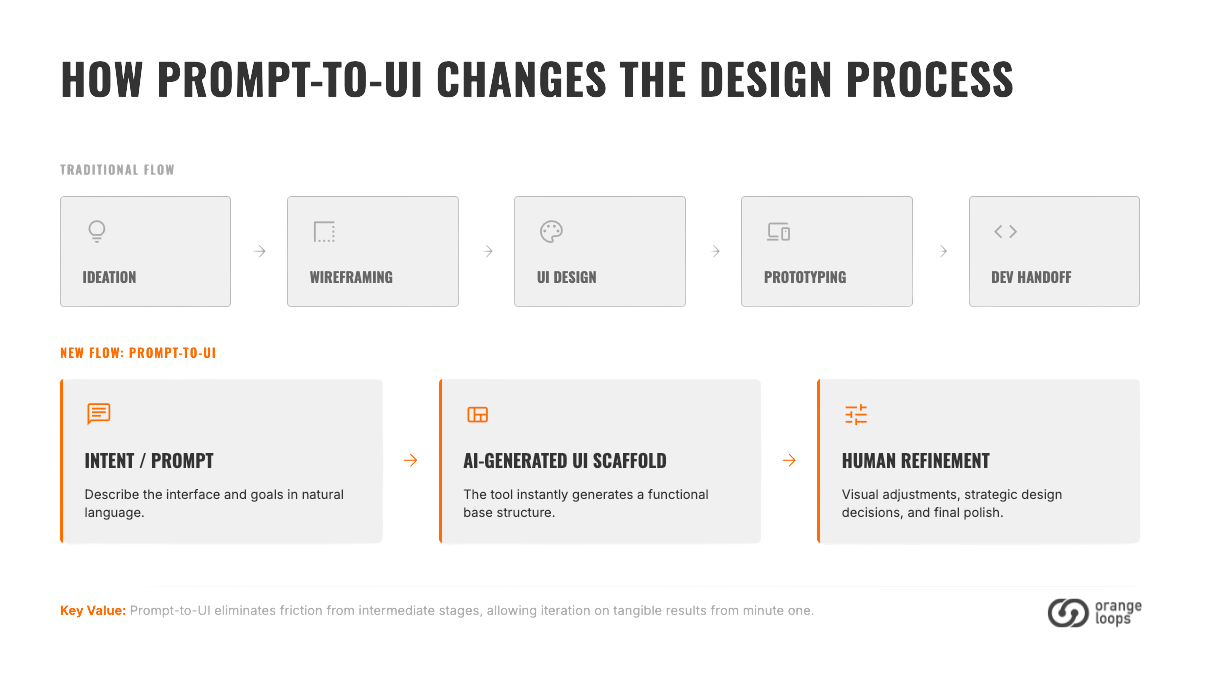

These platforms promise something that feels both exciting and slightly unsettling — the ability to describe a product in natural language and instantly generate a working interface. No wireframes, no pixel pushing, no long developer handoffs. Just a prompt, and a product appears.

It’s a bold claim. The question we asked ourselves was simple: is this more than hype?

Why Prompt-to-UI Feels Different

Design tooling has always evolved around one idea: closing the gap between concept and execution. Sketch redefined digital design by giving creatives a tool purpose-built for interfaces. Then Figma brought collaboration to the cloud, making real-time co-design the new standard. The rise of no-code platforms like Webflow, Framer, and Bubble empowered designers and entrepreneurs to launch functional products without writing traditional code — though a basic understanding of how the web works still goes a long way. Meanwhile, design systems such as Material Design and Apple’s Human Interface Guidelines introduced order and scalability, helping teams ship faster while staying consistent.

Prompt-to-UI takes the next leap by rethinking how interfaces come to life. Instead of manually composing layouts and visual systems, designers can guide the process through thoughtful prompts that express intent, structure, and tone. These tools aren’t limited to text either — you can upload references, attach UI assets, or click directly into generated screens to refine components, adjust styles, and iterate visually. In many cases, AI and human input flow together, blending automation with crafted design.

That’s why we felt compelled to explore it ourselves. At OrangeLoops, Product Discovery plays a defining role in how we design and build products, so any tool that accelerates early exploration without sacrificing quality is worth a closer look.

Our Experiment

We chose three platforms that seemed representative of the category: Lovable, Figma Make, and V0. Each designer on our team was assigned one and given the same challenge: create a lightweight survey app called Fun Survey!

The idea behind Fun Survey! was intentionally simple — an internal tool where teams could answer anonymous multiple-choice surveys, with admins creating questions and viewing aggregate results. It was small enough to be built in a day, yet complete enough to test real product flows like authentication, navigation, and survey creation.
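To make the scope of the brief concrete, here is a minimal TypeScript sketch of its core logic: anonymous multiple-choice answers rolled up into aggregate results for admins. The `Question` and `Answer` types and the `aggregate` function are hypothetical names chosen for illustration. They are not the schema any of the three tools generated, and a real app would still need authentication and persistence on top.

```typescript
// Hypothetical data model for a Fun Survey!-style app; not the schema any tool generated.
interface Question {
  id: string;
  text: string;
  options: string[];
}

// An anonymous answer: no user identifier is stored, only the question and chosen option.
interface Answer {
  questionId: string;
  optionIndex: number;
}

// Returns one count per option, in the same order as question.options.
function aggregate(question: Question, answers: Answer[]): number[] {
  const counts: number[] = question.options.map(() => 0);
  for (const answer of answers) {
    // Skip answers that belong to other questions.
    if (answer.questionId !== question.id) continue;
    const i = answer.optionIndex;
    // Skip malformed answers whose index does not point at a real option.
    if (!Number.isInteger(i) || i < 0 || i >= counts.length) continue;
    counts[i] += 1;
  }
  return counts;
}

const lunch: Question = {
  id: "q1",
  text: "Best team lunch?",
  options: ["Pizza", "Sushi", "Tacos"],
};

const answers: Answer[] = [
  { questionId: "q1", optionIndex: 0 },
  { questionId: "q1", optionIndex: 2 },
  { questionId: "q1", optionIndex: 0 },
  { questionId: "q2", optionIndex: 1 }, // belongs to another question, ignored
];

console.log(aggregate(lunch, answers)); // [ 2, 0, 1 ]
```

Storing only the question ID and the chosen option, with no user identifier, is one simple way to keep answers anonymous. Counting by option index rather than by label also avoids collisions if two options happen to share the same text.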

Armed with a product brief (generated with the help of ChatGPT), we each spent a day experimenting with our assigned platform. At the end of the day, we regrouped to share findings and compare experiences.

First Impressions

Of the three tools, V0 stood out the most. Unlike the others, it generated code our developers considered usable. Because it leans on shadcn/ui and Tailwind CSS, its components followed modern front-end development practices, and that alone made its output feel closer to production code than to a design demo.

The initial setup felt surprisingly smooth. We were able to publish our prototype without major friction and even sync it with GitHub, which meant our development team could pick it up right away. That integration bridged design and engineering in a way we hadn’t seen before with AI-driven platforms.

Another pleasant surprise was how iterative the process could be. Once the first version was generated, we refined it through follow-up prompts: adjusting layouts, tweaking navigation, and polishing flows. The results weren't always perfect, but the process created a sense of conversation with the product, where improvements happened through dialogue rather than through manual edits alone.

Where Reality Hit Harder

But the experiment also revealed clear limitations. Prompt-to-UI tools shine at the big picture, but they stumble on the details. Small adjustments often took as long as major changes, or longer, and sometimes broke other elements unexpectedly. With no granular undo, we had to work cautiously, because rolling back meant reverting to an entire earlier version.

Another challenge was consistency. Not all screens came out with the same level of polish. Some felt cohesive and ready, while others required hands-on design work to align spacing, hierarchy, or visual rhythm.

And then there was complexity. As soon as we pushed beyond basic functionality — like handling authentication, database integration, or security — we hit walls. Our developers could step in to patch things, but the promise of a “no-code” experience started to fade quickly.

Perhaps most importantly, we noticed how unreliable the AI's feedback could be. At times, it reported that changes had been applied when they hadn't, forcing us to second-guess the outputs. That uncertainty slowed us down rather than speeding us up.

What This Means for Designers

The main takeaway for us was not that Prompt-to-UI tools are replacements for design or development, but rather that they shift the starting point. Instead of beginning with a blank Figma file, you start with a scaffold that already feels like a product.

This makes them particularly powerful for discovery and exploration. In early conversations with clients or stakeholders, being able to spin up a working prototype in hours instead of days can change the dynamic entirely. You’re no longer debating abstract wireframes — you’re interacting with something functional.

At the same time, these tools are not yet suited to complex projects or to work where fine detail matters most. They accelerate breadth, not depth.

Key Takeaways

Here’s what we learned from our first exploration:

- Prompt-to-UI platforms are great for fast experiments with low-complexity products.

- The best of them create functional MVP scaffolds that developers can actually work with.

- Iterating through prompts feels natural, though not always predictable.

- Collaboration improves when outputs can sync directly to GitHub.

- Fine-grained adjustments remain inefficient and sometimes risky.

- Despite the “no-code” pitch, some technical fluency is still required.

Final Thoughts

Our experiment confirmed that Prompt-to-UI tools are more than just hype. They can accelerate discovery, reduce friction in early MVP development, and spark more engaging conversations with stakeholders. But they’re not silver bullets.

Think of them as accelerators, not replacements. They compress the time from idea to something you can click on. But design craft, human judgment, and solid development practices remain essential.

In that sense, they resemble the early days of no-code: initially dismissed as toys, but eventually finding a serious place in product workflows. Whether Prompt-to-UI matures into a mainstream tool or stays a niche helper remains to be seen — but either way, it’s a trend worth following closely.

At OrangeLoops, we’ll continue experimenting, blending human design expertise with these emerging AI capabilities. Because while hype fades, the search for better, faster, and more impactful ways to design products never does.

👉 Want to see the actual product brief we used? You can check out the Fun Survey! Document here.