At Orangeloops, we’ve built an internal HR assistant called OLivIA, designed to answer employee questions about benefits, policies, company information, and more. OLivIA interacts with users through Slack, leveraging a structured decision-making process powered by a large language model (LLM).

In this article, we’ll explore two different approaches to orchestrating OLivIA’s workflow:

- One using LangGraph, a library for building stateful, agent-based workflows in code.

- The other using n8n, a visual low-code platform for building automation flows.

By replicating the exact same logic in both systems, we aim to provide a clear, hands-on comparison between these tools in terms of flexibility, complexity, developer experience, and integration with LLMs.

What is LangGraph?

LangGraph is part of the LangChain ecosystem, built to support structured, stateful applications with LLMs at their core. Rather than thinking in terms of linear workflows, LangGraph introduces the concept of a state machine where each node represents a unit of computation, and edges determine transitions based on state or conditions.

LangGraph allows you to:

- Compose workflows programmatically using Python or JavaScript.

- Maintain context through a shared, evolving state object.

- Leverage decision logic and branching directly in code.

- Seamlessly integrate with LangChain’s ecosystem, including its tools, memory management, and agents.

This makes LangGraph especially well-suited for applications where the workflow needs to adapt dynamically to user input, such as conversational agents, assistants, and decision trees.
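To make the node, edge, and shared-state model concrete, here is a minimal, library-free sketch in plain Python. It is not LangGraph’s API: `run_graph`, the node names, the `State` fields, and the keyword-based classifier are hypothetical stand-ins. The sketch only mirrors the core ideas: nodes return partial state updates, and a conditional edge picks the next node from the current state.

```python
from __future__ import annotations

from typing import Callable, Dict, Optional, TypedDict


class State(TypedDict, total=False):
    """Shared state passed between nodes (hypothetical schema)."""
    message: str
    step: str
    answer: str


# A node reads the shared state and returns a partial update.
Node = Callable[[State], State]


def classify(state: State) -> State:
    # Stand-in for an LLM classifier: naive keyword matching, for illustration only.
    text = state["message"].lower()
    return {"step": "benefits" if "vacation" in text or "health" in text else "other"}


def answer_benefits(state: State) -> State:
    return {"answer": "Routing to the benefits handler."}


def answer_other(state: State) -> State:
    return {"answer": "Routing to the general handler."}


def route(state: State) -> str:
    # Conditional edge: pick the next node from the current state.
    return "answer_benefits" if state["step"] == "benefits" else "answer_other"


NODES: Dict[str, Node] = {
    "classify": classify,
    "answer_benefits": answer_benefits,
    "answer_other": answer_other,
}

# Each entry returns the next node's name, or None to stop.
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "classify": route,
    "answer_benefits": lambda _: None,
    "answer_other": lambda _: None,
}


def run_graph(start: str, state: State) -> State:
    """Run nodes from `start`, merging each partial update into the shared state."""
    current: Optional[str] = start
    while current is not None:
        state = {**state, **NODES[current](state)}
        current = EDGES[current](state)
    return state


result = run_graph("classify", {"message": "How many vacation days do I get?"})
print(result["step"], "->", result["answer"])
```

In LangGraph itself, the same wiring is expressed with `StateGraph`, `add_node`, and `add_conditional_edges`. By default, a state key without a reducer is overwritten by each node’s update, which is what the dict merge above imitates.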

What is n8n?

n8n is a powerful, source-available (“fair-code”) workflow automation tool with a visual interface. It lets users connect APIs, services, and logic using nodes and edges in a drag-and-drop environment.

Key features include:

- Visual workflow builder.

- Pre-built integrations for hundreds of services (Slack, Google Sheets, HTTP, OpenAI, etc.).

- Logic nodes like IF, Switch, Merge, and Wait.

- Support for executing custom JavaScript code.

While n8n was not explicitly built for LLM-based workflows, it offers enough flexibility to orchestrate one through API calls and conditional logic, making it a low-code alternative to LangGraph for building automation powered by language models.

Conceptual Comparison

| Feature | LangGraph | n8n |
|---|---|---|
| Approach | Code-first | Visual-first |
| Programming model | State machine, conditional logic | Node-based, flowchart-like |
| LLM integration | Native via LangChain | Built-in nodes for OpenAI and other LLM providers |
| Flexibility | Very high | High for simple/medium workflows |
| Observability | Limited (unless manually added) | Built-in visualization and run logs |
| Learning curve | Steeper (Python or JavaScript required) | Easier for non-developers |
| Deployment | Requires infrastructure setup | Self-hosted or managed via n8n Cloud (paid option) |

Practical Example: OLivIA, the HR Assistant

Use Case

OLivIA is designed to assist employees through Slack by answering questions related to company information like policies, referral programs, and benefits. Based on the message, OLivIA routes the query to the appropriate node and provides a helpful response.

Architecture with LangGraph

Architecture with n8n

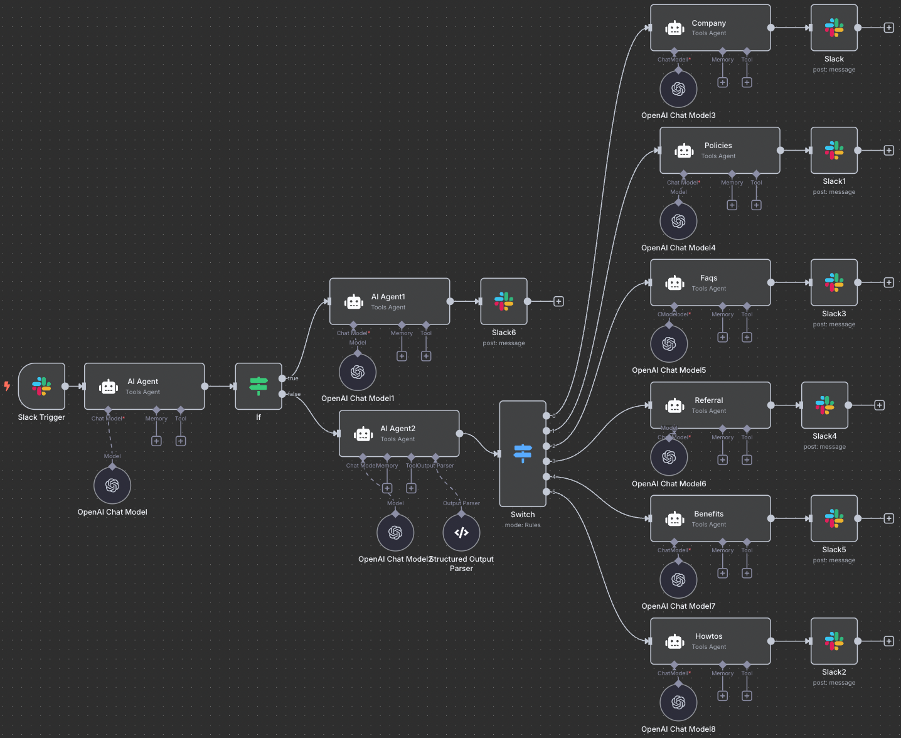

In n8n, the workflow is constructed visually using a series of connected nodes that reflect both control flow and logic. Here’s how the OLivIA agent is implemented:

- Slack Trigger: The workflow is initiated when OLivIA receives a message in a designated Slack channel. The content of the message is extracted from the Slack payload.

- AI Agent Node: This node sends the user’s message to an LLM together with a system prompt designed to classify it into HR-related categories (e.g., company info, policies, FAQs). It uses OpenAI’s GPT model behind the scenes, with structured prompting to control the output format.

- Structured Output Parser: The response is parsed to extract a structured field called “step”, which determines the topic or type of question being asked.

- Switch Node: A Switch node evaluates the step value and routes the workflow accordingly.

- Final Response: After routing, the appropriate message is generated (often with another OpenAI node using a topic-specific prompt) and is returned to Slack.

This architecture leverages n8n’s strength in orchestration and conditional logic, while still using large language models for understanding and responding to user intent in a modular and maintainable way.
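The parse-then-switch part of this flow can be sketched in plain Python as follows. In the actual workflow this logic lives in n8n’s Structured Output Parser and Switch nodes, not in code. The category labels, the `{"step": ...}` JSON shape, the handler texts, and the default reply are assumptions for illustration.

```python
import json

# Hypothetical category labels; the article does not list the prompt's exact values.
HANDLERS = {
    "company_info": "Answer with the company information prompt.",
    "policies": "Answer with the policies prompt.",
    "referrals": "Answer with the referral program prompt.",
    "benefits": "Answer with the benefits prompt.",
}
FALLBACK = "fallback"


def parse_step(raw_llm_output: str) -> str:
    """Extract the "step" field, returning FALLBACK for malformed or unknown output."""
    try:
        data = json.loads(raw_llm_output)
    except json.JSONDecodeError:
        return FALLBACK
    step = data.get("step") if isinstance(data, dict) else None
    return step if isinstance(step, str) and step in HANDLERS else FALLBACK


def switch(step: str) -> str:
    """Like a Switch node: one branch per known value, plus a default branch."""
    return HANDLERS.get(step, "Ask the user to rephrase the question.")


print(switch(parse_step('{"step": "benefits"}')))
print(switch(parse_step("not json")))
```

Validating the label before routing means a malformed or unexpected LLM reply lands in a predictable default branch instead of failing the run.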

Practical Comparison

Development Speed

- n8n was faster to prototype due to its visual interface.

- LangGraph required more setup but offered finer control and better structure for complex logic.

Flexibility

- LangGraph made it easier to model dynamic conversations and maintain complex state across multiple steps and turns. Its built-in support for state management allows agents to update and use memory across looping structures or non-linear paths.

- n8n excels at linear and branched workflows, where passing data from one node to the next is straightforward. However, modeling more dynamic dialog patterns (e.g., maintaining memory across Slack conversations) may require custom implementations or external memory storage.
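One way to keep context across Slack messages is to key a small history buffer on the conversation thread. The sketch below assumes Slack Events API `message` events with `channel`, `ts`, optional `thread_ts`, and `text` fields. The in-memory dict stands in for whatever external store (Redis, a database) a real deployment would use, and `conversation_key`, `remember`, and `MAX_TURNS` are hypothetical names and values.

```python
from __future__ import annotations

from collections import defaultdict, deque

MAX_TURNS = 6  # Hypothetical window: keep only the most recent turns per thread.

# In-memory stand-in for an external store such as Redis or a database.
_memory = defaultdict(lambda: deque(maxlen=MAX_TURNS))


def conversation_key(event: dict) -> str:
    """Replies in a thread share thread_ts; a top-level message keys on its own ts."""
    return f'{event["channel"]}:{event.get("thread_ts", event["ts"])}'


def remember(event: dict, role: str, text: str) -> list[tuple[str, str]]:
    """Append one turn and return this conversation's recent history, oldest first."""
    history = _memory[conversation_key(event)]
    history.append((role, text))
    return list(history)


# Usage with two hypothetical Slack message events from the same thread.
first = {"channel": "C123", "ts": "1700000000.000100", "text": "What is the referral bonus?"}
reply = {"channel": "C123", "ts": "1700000050.000200",
         "thread_ts": "1700000000.000100", "text": "Does it apply to senior roles?"}
remember(first, "user", first["text"])
history = remember(reply, "user", reply["text"])
print(len(history))  # 2
```

The bounded `deque` keeps prompts from growing without limit; the returned history would be prepended to the next LLM call.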

LLM Integration

- LangGraph integrates directly with LangChain agents, allowing fine-grained control over prompt construction, tool usage, and agent behavior.

- n8n provides built-in nodes for OpenAI and other LLM providers, making it easy to call models without extra setup. While its AI Agent node is less configurable than LangChain’s agent abstractions, n8n allows for modular workflows that emulate agent behavior using a combination of LLM calls, parsing, and branching logic.

Observability & Debugging

- n8n’s UI made it easier to trace flows and debug issues.

- LangGraph flows required custom logging or external tools.
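As an example of the custom logging this can involve, a decorator like the hypothetical `traced` below wraps a node function and logs its name, the state keys it updated, and its duration, using only the standard library. It is one possible approach, not a LangGraph feature; the logger name and the placeholder node are assumptions.

```python
import functools
import logging
import time
from typing import Any, Callable, Dict

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(name)s %(message)s")
logger = logging.getLogger("olivia.trace")  # Hypothetical logger name.

State = Dict[str, Any]


def traced(node: Callable[[State], State]) -> Callable[[State], State]:
    """Log each call's node name, the state keys it updated, and its duration."""
    @functools.wraps(node)
    def wrapper(state: State) -> State:
        start = time.perf_counter()
        try:
            update = node(state)
        except Exception:
            # Record the failing node with its traceback, then re-raise unchanged.
            logger.exception("node=%s failed", node.__name__)
            raise
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("node=%s updated=%s took=%.1fms", node.__name__, sorted(update), elapsed_ms)
        return update
    return wrapper


@traced
def classify(state: State) -> State:
    # Placeholder node; a real one would call the LLM.
    return {"step": "policies"}


print(classify({"message": "What is the remote work policy?"}))
```

Because the wrapper returns the node’s update unchanged, it can be applied to existing node functions without altering graph behavior; a hosted tracing tool such as LangSmith is the heavier-weight alternative.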

Evaluation and Validation with LLM-as-a-Judge

To evaluate the performance of OLivIA in both LangGraph and n8n implementations, we introduced an automated test harness using an “LLM as judge” pattern. A curated dataset of 47 representative questions was fed into each version of the agent, and the resulting answers were evaluated by a second LLM configured to provide structured feedback on accuracy, completeness, relevance, and clarity.

| Metric | LangGraph | n8n |
|---|---|---|
| Total questions | 47 | 47 |
| Average score | 7.99 | 7.74 |
| Excellent (>8) | 27 | 17 |
| Good (7-8) | 13 | 21 |
| Fair (5-6) | 3 | 3 |
| Poor (<5) | 4 | 6 |

Both implementations achieved similar average scores (7.99 for LangGraph vs. 7.74 for n8n). LangGraph produced more answers rated excellent (27 vs. 17), while n8n had more answers rated good (21 vs. 13) and slightly more rated poor (6 vs. 4).
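The scoring side of such a harness can be sketched as below; the call to the judge model itself is omitted. The judge’s JSON schema (one 0-10 score per criterion, averaged into an overall score) and the cut-offs that close the gaps between the table’s ranges (for example, where a 6.5 falls) are assumptions, since the article does not specify them.

```python
from __future__ import annotations

import json
from statistics import mean

# Assumed judge output schema: one 0-10 score per criterion named in the article.
CRITERIA = ("accuracy", "completeness", "relevance", "clarity")
LABELS = ("Excellent", "Good", "Fair", "Poor")


def overall_score(judge_output: str) -> float:
    """Average the per-criterion scores in the judge's JSON reply."""
    scores = json.loads(judge_output)
    return mean(float(scores[c]) for c in CRITERIA)


def bucket(score: float) -> str:
    # Assumed cut-offs that close the gaps between the table's ranges (e.g. 6-7).
    if score > 8:
        return "Excellent"
    if score >= 7:
        return "Good"
    if score >= 5:
        return "Fair"
    return "Poor"


def summarize(judge_outputs: list[str]) -> dict:
    """Aggregate judge replies into the same shape as the results table."""
    scores = [overall_score(o) for o in judge_outputs]
    counts = {label: 0 for label in LABELS}
    for s in scores:
        counts[bucket(s)] += 1
    return {"total": len(scores), "average": round(mean(scores), 2), **counts}


replies = [
    '{"accuracy": 9, "completeness": 9, "relevance": 10, "clarity": 9}',
    '{"accuracy": 7, "completeness": 6, "relevance": 8, "clarity": 8}',
    '{"accuracy": 3, "completeness": 4, "relevance": 5, "clarity": 6}',
]
print(summarize(replies))
# {'total': 3, 'average': 7.0, 'Excellent': 1, 'Good': 1, 'Fair': 0, 'Poor': 1}
```

Keeping parsing, bucketing, and aggregation as separate functions makes it easy to run the same scoring over both agents’ answers.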

Which one should you choose?

Choose LangGraph if:

- You want maximum control over the code. LangGraph lets you treat each step as a plain function over a typed state schema and wire the steps together with explicit state transitions, so you can implement sophisticated memory management, looping, or fallback strategies without fighting the tool.

- Dynamic or deeply branched conversations matter. Because every node can update a shared state object, it’s easier to keep long-running context, build multi-turn agents, or pivot mid-flow when the user changes direction.

- You’re fine managing your own stack. LangGraph runs as plain Python or JavaScript, which suits unit testing, CI/CD, and custom monitoring, but log storage, tracing, and scaling are all on you.

Choose n8n if:

- Speed and approachability trump low-level control. Drag-and-drop nodes, instant execution logs, and built-in service connectors mean you can stand up a proof of concept in minutes and hand it off to non-developers later.

- Your workflow is mostly linear or mildly branched. n8n excels when data flows from one node to the next with a few conditional forks; adding complex recursion or cross-cutting state is possible but can be verbose.

- You value observability out of the box. Every run is replayable in the UI, making debugging easier than digging through ad-hoc logs.

Conclusion

Both LangGraph and n8n offer powerful ways to build workflows that integrate AI agents, but they serve different needs and audiences.

LangGraph shines when you need complete control over your agent’s behavior, including memory management, dynamic tool usage, and nuanced flow logic. It’s better suited for developers comfortable with code who want to build sophisticated, custom agent systems.

n8n, on the other hand, is ideal when you want to move fast and iterate visually. It offers out-of-the-box LLM integrations, easy state passing between nodes, and a user-friendly interface to orchestrate logic across systems. While it lacks some of the agent-specific abstractions of LangGraph, it’s surprisingly capable of handling many real-world AI workflows, especially task-oriented and modular ones.

To validate both approaches, we implemented an evaluation pipeline using a second LLM as a judge. While both agents achieved similar average scores, LangGraph delivered a higher proportion of “excellent” answers, and n8n delivered more answers rated “good”.

In our case, we found that LangGraph gave us fine-grained control for prototyping our HR agent’s reasoning, while n8n made it easy to deploy that logic into production-ready workflows and integrate it with platforms like Slack. The combination of both turned out to be a pragmatic and effective solution, leveraging LangGraph for precision and n8n for execution.

Looking to build your own AI-powered agent or integrate LLM workflows into your business? Let’s discuss your next project — we help startups and enterprises turn ideas into real, scalable solutions.