UPDATE: ARKit 5 is here! What’s new?

ARKit is Apple’s framework for working with Augmented Reality on iOS. When version 2 launched in 2018, it brought new features and improvements over the previous version, yet it still has a long way to go.

Our dev team spent some days researching and playing with it in order to find out how far it could go, and here are the conclusions.

Which things does this framework do well? And which ones not so much? Let’s take a look at the Good, the Bad and the Ugly of ARKit 2.

The Good about ARKit 2

Plane detection technology

Out of the box, ARKit 2 includes many tools for creating a rich AR experience. One of them is the ability to detect horizontal and vertical planes by using the device’s camera to track what are known as feature points.

When the framework’s algorithm recognizes these points aligned on a real-world flat surface, it returns this information as an ARPlaneAnchor object, which contains data about that plane and represents the link between the real surface and its virtual counterpart, tracked throughout the AR session.

This information includes the general geometry of the detected plane, along with its center and dimensions, which are updated as the device discovers more of the real world. On supported iOS devices (those with an A12 chip or later), ARKit attempts to classify detected planes as walls, floors, seats, ceilings or tables, or as none if no category applies. The application can use this additional data, for instance, to spawn certain objects only on floors rather than on every detected horizontal plane, allowing a more engaging AR experience.
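As a rough illustration of the above, the following sketch enables plane detection and reacts only to planes classified as floors. It assumes a SceneKit-based view and an iOS 12 deployment target; the class name `PlaneViewController` and the `sceneView` outlet are placeholders for illustration, not code from our tests.

```swift
import UIKit
import ARKit

// Hypothetical view controller; `sceneView` is assumed to be connected in a storyboard.
final class PlaneViewController: UIViewController, ARSCNViewDelegate {
    @IBOutlet var sceneView: ARSCNView!

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        sceneView.delegate = self

        // Ask ARKit to look for both horizontal and vertical planes.
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal, .vertical]
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Called when ARKit adds a new anchor, for example a newly detected plane.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let planeAnchor = anchor as? ARPlaneAnchor else { return }

        // Classification is only reported on supported hardware.
        guard ARPlaneAnchor.isClassificationSupported,
              case .floor = planeAnchor.classification else { return }

        // Place a small marker at the plane's center (in the anchor's local space).
        let marker = SCNNode(geometry: SCNSphere(radius: 0.02))
        marker.simdPosition = planeAnchor.center
        node.addChildNode(marker)
    }
}
```

On devices without classification support the second `guard` simply skips the plane, so a real app would probably need a fallback, such as accepting any horizontal plane.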

Spawning virtual objects with their relative movement

As part of an enhanced augmented reality experience, virtual objects should behave as closely as possible to real ones. In particular, relative movement and parallax effects contribute greatly to this, making objects appear to be placed in the world itself and not just on the device’s screen with no relation to their surroundings.

ARKit 2 is very successful at this task, tracking the user’s movements throughout the AR session and adjusting the virtual objects’ positions accordingly, relative to a set of initial coordinates.

This requires almost no effort from the developer: the template created when you start a new project already shows an object (a spaceship asset) placed in the scene and lets the user move around it and see it from all sides. This is a very strong feature of ARKit 2, especially considering how easy it is to work with.

Recognizing exact images

As part of our research into the ARKit 2 framework, we looked for image recognition solutions that would let us attach virtual objects, like text or even videos, to identified images (see the example below). A use case for this feature could be showing information about a company when its logo is recognized.

ARKit has a built-in image recognition system to which you can feed digital images that will then be recognized in the real world. SceneKit then allows you to perform certain actions once the image has been recognized by the software.

This was easy to accomplish because, when the images are loaded, Xcode analyzes them and gives the developer advice about each image’s quality to ensure more precise recognition. For instance, characteristics like size, colors or even design are taken into consideration.

After running several tests and following Xcode’s feedback, we found that the system compares each image reliably and makes sure they match exactly. Pictures such as landscapes, with a wide variety of colors and shapes, are identified well, as they are processed by their color histogram. Problems appear with simpler ones, something that we’ll discuss later in this post.
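To make the recognition flow described above more concrete, here is a minimal sketch of loading reference images from the asset catalog and overlaying a plane once one is detected. The group name "AR Resources", the class name and the `sceneView` outlet are assumptions for illustration.

```swift
import UIKit
import ARKit

// Hypothetical view controller; "AR Resources" is an assumed asset catalog group name.
final class ImageDetectionViewController: UIViewController, ARSCNViewDelegate {
    @IBOutlet var sceneView: ARSCNView!

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        sceneView.delegate = self

        let configuration = ARWorldTrackingConfiguration()
        // Load the reference images bundled with the app.
        if let images = ARReferenceImage.referenceImages(inGroupNamed: "AR Resources", bundle: nil) {
            configuration.detectionImages = images
        }
        sceneView.session.run(configuration)
    }

    // Called when ARKit recognizes one of the reference images.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let imageAnchor = anchor as? ARImageAnchor else { return }
        let size = imageAnchor.referenceImage.physicalSize

        // Semi-transparent overlay with the same physical size as the image.
        let plane = SCNPlane(width: size.width, height: size.height)
        plane.firstMaterial?.diffuse.contents = UIColor.white.withAlphaComponent(0.5)

        let overlay = SCNNode(geometry: plane)
        // SCNPlane is vertical by default; rotate it to lie flat on the image.
        overlay.eulerAngles.x = -.pi / 2
        node.addChildNode(overlay)
    }
}
```

The overlay could equally carry text or video as its material contents; the white plane is only the simplest thing to show.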

Realistic physical characteristics of an object

One of the approaches that we wished to cover was the ability of virtual objects to interact with each other and to have behaviors based on real-world physics.

ARKit 2, through SceneKit’s physics engine, lets you add physical characteristics to objects, such as their mass. For instance, giving mass to a virtual object with an oval base, such as a boat, makes it start to wobble when you move the plane it rests on.

Furthermore, the framework can apply physical forces to virtual objects and generate a reaction from them, like moving forward or even falling if they are pushed off the base plane. These physical properties make virtual objects look more realistic and improve AR immersion.
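A minimal sketch of these ideas using SceneKit’s physics bodies might look like the following. The function names, sizes, mass and friction values are illustrative assumptions, not figures from our tests.

```swift
import SceneKit

// Builds a dynamic box sitting just above a static base plane.
func makePhysicsDemoNodes() -> (box: SCNNode, floor: SCNNode) {
    // Dynamic body: affected by gravity, forces and collisions.
    let box = SCNNode(geometry: SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0))
    let body = SCNPhysicsBody(type: .dynamic, shape: nil) // nil: shape is derived from the geometry
    body.mass = 2.0      // kilograms (illustrative value)
    body.friction = 0.6  // illustrative value
    box.physicsBody = body
    box.position = SCNVector3(0, 0.06, 0)

    // Static body: never moves, but other bodies collide with it.
    let floor = SCNNode(geometry: SCNBox(width: 1, height: 0.01, length: 1, chamferRadius: 0))
    floor.physicsBody = SCNPhysicsBody(type: .static, shape: nil)

    return (box, floor)
}

// Pushes a node along the negative Z axis with an instantaneous impulse.
func push(_ node: SCNNode) {
    node.physicsBody?.applyForce(SCNVector3(0, 0, -1), asImpulse: true)
}
```

Both nodes still have to be added to the scene (for example under a detected plane’s node) before the simulation affects them. A strong enough push will carry the box past the edge of the base, where gravity takes over.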

Integral and adaptive architecture

In terms of its architecture, it is worth mentioning that ARKit is similar (in how it divides functionality into classes) to other technologies that involve 3D object interaction, such as game development tools like the Unity engine.

The concepts and terminology the framework handles, like Nodes, Anchors, PhysicsBodies and Shapes, among others, are present in some form in other technologies the developer might be more familiar with and can be mapped to them. This is convenient for speeding up the learning process, which is pretty steep either way.

Apart from that, through our experimentation, we found that it can be integrated with other frameworks or libraries. For instance, a UIView (from iOS’s UIKit) can be placed in an augmented reality context when a plane or image is detected, which makes it possible to set buttons in the user’s view and allow interaction in this context, or to play videos on some surface.

Besides, when testing image recognition, we found it is possible to integrate Core ML, which is an interesting option when ARKit’s capabilities in this area need to be extended.
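One possible shape for such an integration is to pass the camera image of the current AR frame to a Core ML classifier through the Vision framework. The sketch below assumes you already have a trained image classification `MLModel`; the function name `classify(frame:with:completion:)` is hypothetical, and the `.right` orientation assumes the device is held in portrait.

```swift
import ARKit
import Vision
import CoreML

// Runs an image classifier on the camera image of an AR frame.
// The completion handler is called on a background queue.
func classify(frame: ARFrame, with model: MLModel, completion: @escaping (String?) -> Void) {
    guard let visionModel = try? VNCoreMLModel(for: model) else {
        completion(nil)
        return
    }

    let request = VNCoreMLRequest(model: visionModel) { finished, _ in
        // Classifier results are sorted by confidence; take the best one.
        let best = (finished.results as? [VNClassificationObservation])?.first
        completion(best?.identifier)
    }

    // `capturedImage` is the raw camera pixel buffer for this frame.
    let handler = VNImageRequestHandler(cvPixelBuffer: frame.capturedImage,
                                        orientation: .right, // assumption: portrait
                                        options: [:])
    DispatchQueue.global(qos: .userInitiated).async {
        do {
            try handler.perform([request])
        } catch {
            completion(nil)
        }
    }
}
```

Running this on every frame would likely be too expensive, so an app would probably throttle it, for instance by classifying only when no request is in flight.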

The Bad about ARKit 2

Plane recognition is not always accurate

Even though the process of recognizing horizontal and vertical planes works fine in most cases, there are some edge cases where it doesn’t. In these cases, the feature points that allow planes to be identified are not always “visible” to the camera because of certain circumstances of the environment, such as the lighting, colors, and shadows.

For instance, a white wall or a uniformly colored table doesn’t provide the visual detail the camera needs to produce feature points to work with. Take a look at the image below:

We ran several tests where the goal was to identify a completely white table with nothing on it, and it was impossible to recognize (image 3).

Then we put some stuff on it, like pencils or even a keyboard, and after the software identified them, it quickly did the same with the table (images 1-2, where the plane is shown in brown). Although this may be related to limitations of the device’s camera, we still think that there’s a lot of room for improvement.

Image identification only works well within strict parameters

Previously, we talked about the features that come with image recognition in ARKit 2, such as the ease of working with it or the feedback that the IDE provides when loading identifiable images into the project.

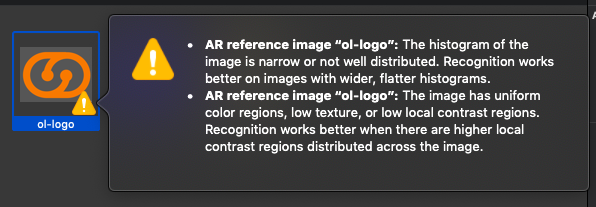

However, this is not a very flexible feature, and minor changes in, say, the image’s color pattern make it difficult or impossible for the software to recognize it. Also, if the image is too simple, or its color range is too narrow, it becomes very hard to identify, and that is what Xcode is reporting in the image below:

As a solution to this problem, it would be interesting to put a trained machine learning model in charge of recognition, leaving ARKit to take care of only the AR part.

Bring your math skills

Creating a realistic AR experience, one that emulates real object behavior, particularly if there is movement or physics involved, is not an easy task. Even though the ARKit engine takes on much of the hard work, the level of math needed to implement a lifelike experience in the app is probably beyond the typical dev’s comfort zone.

For instance, one point that can be tricky to implement correctly is converting from 2D screen/view coordinates to 3D ones in the virtual world (done through hit testing), especially considering that each Node’s coordinates are given in relation to its parent’s coordinate system. Different data structures and types are used for all these coordinates, and operating on them involves vector and matrix transformations. Although efficient from a technical point of view, these are not easy to understand for someone new to this domain, as they involve mathematical concepts such as linear transformations and quaternions. Most of this is thankfully encapsulated in functions and methods that are easy to call (but difficult to understand and master).
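To give a feel for what “coordinates relative to the parent” means, here is a small framework-free sketch. `SketchNode` is a hypothetical stand-in for a scene node, deliberately reduced to a position plus a rotation about the Y axis; real nodes carry full 4x4 transforms, and the framework’s own conversion methods should be preferred in an app.

```swift
import Foundation

// Minimal stand-in for a scene node: a position and a rotation about the
// Y axis (yaw), both expressed in the parent's coordinate system.
final class SketchNode {
    var position: SIMD3<Double>
    var yaw: Double // radians
    var parent: SketchNode?

    init(position: SIMD3<Double>, yaw: Double = 0, parent: SketchNode? = nil) {
        self.position = position
        self.yaw = yaw
        self.parent = parent
    }

    // Converts a point from this node's local space to its parent's space:
    // rotate about Y first, then translate.
    func toParentSpace(_ p: SIMD3<Double>) -> SIMD3<Double> {
        let c = cos(yaw)
        let s = sin(yaw)
        let rotated = SIMD3<Double>(c * p.x + s * p.z, p.y, -s * p.x + c * p.z)
        return rotated + position
    }

    // Walks up the hierarchy to the root to obtain world coordinates.
    func toWorldSpace(_ p: SIMD3<Double>) -> SIMD3<Double> {
        let inParent = toParentSpace(p)
        return parent?.toWorldSpace(inParent) ?? inParent
    }
}

// A root placed at x = 1 and turned 90 degrees, with a child one unit "in front" of it.
let root = SketchNode(position: SIMD3<Double>(1, 0, 0), yaw: .pi / 2)
let child = SketchNode(position: SIMD3<Double>(0, 0, -1), parent: root)

// Where does the child's own origin end up in world space?
let worldOrigin = child.toWorldSpace(SIMD3<Double>(0, 0, 0))
print(worldOrigin)
```

Because the root is rotated, the child’s local offset of one unit along negative Z becomes one unit along negative X in world space, which cancels the root’s own offset and puts the child at (approximately) the world origin. That is exactly the kind of result that is hard to predict without working through the transformations.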

Regarding the physics aspect of an AR project, several concepts, such as mass, force, impulse, and static and dynamic friction constants, are needed to configure bodies and joints correctly and to understand how they interact. Even though it may be possible to set these through trial and error, some physics background is definitely helpful when working with ARKit in order to make interactions like collisions more realistic.

Finally, we found that data from ARKit’s detection system can jitter, for instance when tracking the position of a certain image or when a plane anchor’s dimensions change. It is therefore sometimes useful to establish error thresholds before updating or taking any action, and perhaps to use linear interpolation to smooth out movements and make them more realistic. These are not that easy to implement effectively, which makes the framework as a whole quite hard to master.
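The threshold-plus-interpolation idea can be sketched without any ARKit types. `PositionSmoother` below is a hypothetical helper, and the threshold and factor values are arbitrary assumptions that would need tuning per app.

```swift
// Smooths noisy position updates: small changes are ignored (dead zone),
// larger ones are approached gradually through linear interpolation.
struct PositionSmoother {
    var current: SIMD3<Float>
    let threshold: Float // metres; smaller changes are treated as jitter
    let factor: Float    // 0...1; fraction of the remaining distance covered per update

    // Linear interpolation between a and b.
    static func lerp(_ a: SIMD3<Float>, _ b: SIMD3<Float>, _ t: Float) -> SIMD3<Float> {
        return a + (b - a) * t
    }

    // Feeds a new measurement and returns the smoothed position.
    mutating func update(with measured: SIMD3<Float>) -> SIMD3<Float> {
        let d = measured - current
        let distance = (d.x * d.x + d.y * d.y + d.z * d.z).squareRoot()
        if distance >= threshold {
            current = PositionSmoother.lerp(current, measured, factor)
        }
        return current
    }
}

var smoother = PositionSmoother(current: SIMD3<Float>(0, 0, 0), threshold: 0.01, factor: 0.5)
let afterJitter = smoother.update(with: SIMD3<Float>(0.002, 0, 0)) // below threshold: ignored
let afterMove = smoother.update(with: SIMD3<Float>(0.2, 0, 0))     // moves halfway toward 0.2
print(afterJitter, afterMove)
```

In an app, `update(with:)` would be called from the anchor update callback, and the returned value applied to the node instead of the raw measurement. A lower `factor` gives smoother but laggier motion, which is the trade-off that makes this hard to get right.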

ARKit hardware requirements

As expected, this framework requires an iOS device with an A9 processor or later (iPhone 6s or later) and at least iOS 11 (iOS 12 for the features introduced with ARKit 2), while some features are only available from the A12 processor onwards.
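Since support varies by device, an app will probably want to check capabilities at runtime rather than assume them. A minimal sketch, where the function name `arCapabilities()` is our own and an iOS 12 deployment target is assumed:

```swift
import ARKit

// Reports which of the AR features discussed in this post the current device supports.
func arCapabilities() -> (worldTracking: Bool, planeClassification: Bool) {
    return (
        worldTracking: ARWorldTrackingConfiguration.isSupported,
        planeClassification: ARPlaneAnchor.isClassificationSupported
    )
}
```

The result can be used to hide AR entry points entirely on unsupported devices, or to fall back to unclassified planes on older hardware.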

Apart from that, in terms of phone resource consumption when executing an AR application programmed with ARKit, we noticed a significant drop in battery percentage after some minutes of use.

This could be an interesting point to keep in mind when developing mobile applications in certain contexts, as battery consumption is a crucial aspect.

iOS only

As with many things Apple, ARKit is an iOS-only framework that is not available for Android apps. If you are serious about cross-platform support and feature parity, you will need to bring the same capabilities to iOS and Android. Instead of having your team learn two frameworks and two ways of doing things, you may want to evaluate a library that supports both platforms, such as ARCore.

The Ugly about ARKit 2

Cannot identify whether the camera’s vision is hindered when spawning virtual objects

As we said earlier in this post, spawning virtual objects is pretty easy and works fine. Problems start to appear when the camera’s vision gets hindered and the virtual object remains visible. The system is not able to realize that it shouldn’t display it, as can be seen in the example below.

Granted, this problem is hard, and a proper implementation would probably require two cameras to measure depth. Still, the ability to easily blend spawned objects with existing ones remains a challenge not solved out of the box by the current ARKit version, which totally breaks the illusion of the virtual object actually being there.

Poor object recognition (compile time & unreliable)

As mentioned before, ARKit offers the possibility of recognizing specific known objects or images in the real world. This works as long as the objects to recognize meet certain quality standards (high color contrast, no repetitive patterns, etc.) AND the objects were scanned properly (from different angles, adjusting the bounding box as needed, etc.).

Still, there are some key limitations in this, according to our testing.

The first is that both image files (with the .png extension, for instance) and 3D object data to be detected by the application (with the .arobject file extension) have to be included at compile time in a resource group handled by the application. This implies that all objects and images to be identified have to be known beforehand, when developing the application itself.
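For reference, loading bundled .arobject files from a resource group can be sketched as follows. The group name "AR Objects" and both function names are assumptions for illustration.

```swift
import ARKit

// Builds a configuration that detects the reference objects bundled with the app.
// "AR Objects" is a hypothetical resource group name.
func makeObjectDetectionConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    if let objects = ARReferenceObject.referenceObjects(inGroupNamed: "AR Objects", bundle: nil) {
        configuration.detectionObjects = objects
    }
    return configuration
}

// Returns the name of the detected reference object, or nil for any other anchor type.
func detectedObjectName(for anchor: ARAnchor) -> String? {
    guard let objectAnchor = anchor as? ARObjectAnchor else { return nil }
    return objectAnchor.referenceObject.name
}
```

The configuration is passed to the session’s `run(_:)` method, and `detectedObjectName(for:)` could be called from the same anchor callback used for planes and images.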

According to our investigation, ARKit offers no way of detecting or categorizing 3D objects (e.g. recognizing a certain object as a cup of coffee) other than this route, with the exception of flat surfaces. The process of loading the information about a real object so that ARKit can recognize it later is shown below:

The second is that ARKit uses an algorithm that compares the images captured by the device’s camera with such files and returns a result based on how similar they are, without any sort of artificial intelligence or learning behind the process.

This means that detection suffers greatly when conditions such as lighting or viewing angle vary. This is something to look out for if object detection is crucial for the application in changing contexts. It can be improved either by improving the information ARKit is provided with, or possibly by integrating it with machine learning models trained to recognize the desired object.

Final thoughts

These were our findings about the ARKit 2 framework after spending some days playing with it. Even though it brings interesting capabilities to mobile app development, it still feels like a technology in its infancy, leaving to the developer many responsibilities that one would expect to be solved, or at least better approximated, out of the box.

Are you thinking about implementing Augmented Reality in your next mobile project? Get in touch!